Liquid AI, the Boston-based foundation model startup spun out of the Massachusetts Institute of Technology (MIT), is seeking to move the tech industry beyond its reliance on the Transformer architecture underpinning most popular large language models (LLMs), such as OpenAI’s GPT series and Google’s Gemini family.

Yesterday, the company announced “Hyena Edge,” a new convolution-based, multi-hybrid model designed for smartphones and other edge devices, in advance of the International Conference on Learning Representations (ICLR) 2025.

The conference, one of the premier events for machine learning research, is taking place this year in Singapore.

New convolution-based model promises faster, more memory-efficient AI at the edge

Hyena Edge is engineered to outperform strong Transformer baselines on both computational efficiency and language model quality.

In real-world tests on a Samsung Galaxy S24 Ultra smartphone, the model delivered lower latency, a smaller memory footprint, and better benchmark results than a parameter-matched Transformer++ model.

A new architecture for a new era of edge AI

Unlike most small models designed for mobile deployment, including SmolLM2, the Phi models, and Llama 3.2 1B, Hyena Edge steps away from traditional attention-heavy designs. Instead, it strategically replaces two-thirds of grouped-query attention (GQA) operators with gated convolutions from the Hyena-Y family.
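To make the idea of a gated convolution concrete, here is a minimal Python sketch. It is a generic illustration, not Liquid AI's Hyena-Y operator: the function names, the fixed kernel, and the hand-written gate values are all assumptions made for this example. In a real model, the gate and the kernel would typically come from learned projections of the input.

```python
def causal_conv(x, kernel):
    """Causal 1-D convolution: y[t] = sum over k of kernel[k] * x[t - k]."""
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            if t - k >= 0:  # only look at the current and earlier positions
                acc += w * x[t - k]
        out.append(acc)
    return out


def gated_conv(x, gate, kernel):
    """Scale each convolved value by a per-position gate (hypothetical values)."""
    conv = causal_conv(x, kernel)
    return [g * c for g, c in zip(gate, conv)]


x = [1.0, 2.0, 3.0, 4.0]
gate = [1.0, 0.0, 1.0, 0.5]  # hand-picked for illustration only
kernel = [0.5, 0.5]          # short averaging filter, also an assumption
y = gated_conv(x, gate, kernel)
print(y)  # [0.5, 0.0, 2.5, 1.75]
```

With a short kernel like this one, the work per token depends on the kernel length rather than on how many tokens came before, which is one reason convolution-style operators can be attractive on memory-constrained devices.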

The new architecture is the result of Liquid AI’s Synthesis of Tailored Architectures (STAR) framework, which uses evolutionary algorithms to automatically design model backbones and was announced in December 2024.

STAR explores a wide range of operator compositions, rooted in the mathematical theory of linear input-varying systems, to optimize for multiple hardware-specific objectives such as latency, memory usage, and quality.
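For readers unfamiliar with evolutionary architecture search, the toy Python sketch below shows the general pattern: keep a population of candidate layer layouts, score them against several objectives, keep the best, and mutate them. It is not the STAR algorithm. The operator names, the cost tables, the scoring weights, and the `evolve` function are all hypothetical, and the numbers are made up purely so the example runs.

```python
import random

OPERATORS = ["attention", "gated_conv"]

# Made-up per-layer costs, for illustration only (not measured values).
LATENCY = {"attention": 3.0, "gated_conv": 1.0}
MEMORY = {"attention": 2.0, "gated_conv": 1.0}


def fitness(layout):
    """Toy score: quality minus weighted latency and memory penalties."""
    n_attention = layout.count("attention")
    # Assumption: attention helps quality, with diminishing returns after 2 layers.
    quality = len(layout) + 0.5 * min(n_attention, 2)
    latency = sum(LATENCY[op] for op in layout)
    memory = sum(MEMORY[op] for op in layout)
    return quality - 0.1 * latency - 0.05 * memory


def mutate(layout, rng):
    """Return a copy of the layout with one randomly chosen layer re-sampled."""
    child = list(layout)
    child[rng.randrange(len(child))] = rng.choice(OPERATORS)
    return child


def evolve(num_layers=6, population_size=8, generations=30, seed=0):
    """Evolve layer layouts, keeping the top half of each generation."""
    rng = random.Random(seed)
    population = [[rng.choice(OPERATORS) for _ in range(num_layers)]
                  for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: population_size // 2]  # survivors carry over
        children = [mutate(rng.choice(parents), rng) for _ in parents]
        population = parents + children
    return max(population, key=fitness)


best = evolve()
print(best, round(fitness(best), 2))
```

This sketch collapses latency, memory, and quality into a single weighted score for simplicity. A real multi-objective search may instead track a set of trade-off candidates, and would measure latency and memory on the target hardware rather than read them from a table.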

Benchmarked directly on consumer hardware

To validate Hyena Edge’s real-world readiness, Liquid AI ran tests directly on the Samsung Galaxy S24 Ultra smartphone.

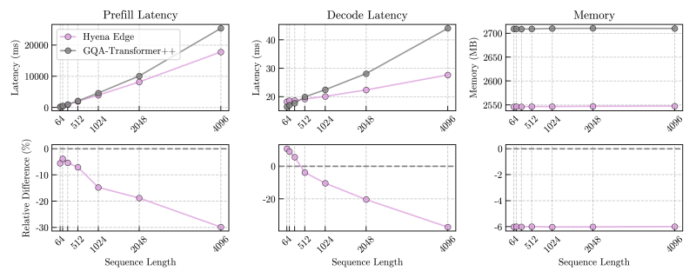

Results show that Hyena Edge achieved up to 30% faster prefill and decode latencies than its Transformer++ counterpart, with speed advantages increasing at longer sequence lengths.

Prefill latencies at short sequence lengths also outpaced the Transformer baseline, a critical performance metric for responsive on-device applications.

In terms of memory, Hyena Edge consistently used less RAM during inference across all tested sequence lengths, positioning it as a strong candidate for environments with tight resource constraints.

Outperforming Transformers on language benchmarks

Hyena Edge was trained on 100 billion tokens and evaluated across standard benchmarks for small language models, including Wikitext, Lambada, PiQA, HellaSwag, Winogrande, ARC-easy, and ARC-challenge.

On every benchmark, Hyena Edge either matched or exceeded the performance of the GQA-Transformer++ model, with noticeable improvements in perplexity scores on Wikitext and Lambada, and higher accuracy rates on PiQA, HellaSwag, and Winogrande.

These results suggest that the model’s efficiency gains do not come at the cost of predictive quality, a common tradeoff for many edge-optimized architectures.
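For context on the perplexity scores mentioned above: perplexity is the exponential of the average negative log-likelihood a model assigns to the correct tokens, so lower values are better. The short Python sketch below shows the standard calculation; the probabilities are invented for illustration and are not Hyena Edge results.

```python
import math


def perplexity(token_log_probs):
    """exp of the mean negative log-likelihood (natural-log probabilities)."""
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# Invented examples: a model that gives each correct token probability 0.25
# versus one that gives each correct token probability 0.5.
uncertain = [math.log(0.25)] * 4
confident = [math.log(0.5)] * 4

print(perplexity(uncertain))  # about 4.0
print(perplexity(confident))  # about 2.0
```

A perplexity near 4 means the model is roughly as unsure as if it were choosing uniformly among four options at each step, which is why a drop in perplexity indicates better next-token prediction.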

Hyena Edge Evolution: A look at performance and operator trends

For those seeking a deeper dive into Hyena Edge’s development process, a recent video walkthrough provides a visual summary of the model’s evolution.

The video highlights how key performance metrics, including prefill latency, decode latency, and memory consumption, improved over successive generations of architecture refinement.

It also offers a rare behind-the-scenes look at how the internal composition of Hyena Edge shifted during development. Viewers can see dynamic changes in the distribution of operator types, such as Self-Attention (SA) mechanisms, various Hyena variants, and SwiGLU layers.

These shifts offer insight into the architectural design principles that helped the model reach its current level of efficiency and accuracy.

By visualizing the trade-offs and operator dynamics over time, the video provides valuable context for understanding the architectural breakthroughs underlying Hyena Edge’s performance.

Open-source plans and a broader vision

Liquid AI said it plans to open-source a series of Liquid foundation models, including Hyena Edge, over the coming months. The company’s goal is to build capable and efficient general-purpose AI systems that can scale from cloud datacenters down to personal edge devices.

The debut of Hyena Edge also highlights the growing potential for alternative architectures to challenge Transformers in practical settings. With mobile devices increasingly expected to run sophisticated AI workloads natively, models like Hyena Edge could set a new baseline for what edge-optimized AI can achieve.

Hyena Edge’s success, both in raw performance metrics and in showcasing automated architecture design, positions Liquid AI as one of the emerging players to watch in the evolving AI model landscape.
