This post is co-written with Haya Axelrod Stern, Zion Rubin, and Michal Urbanowicz from Natural Intelligence.

Many organizations turn to data lakes for the flexibility and scale needed to handle large volumes of structured and unstructured data. However, migrating an existing data lake to a new table format such as Apache Iceberg can bring significant technical and organizational challenges.

Natural Intelligence (NI) is a world leader in multi-category marketplaces. NI’s leading brands, Top10.com and BestMoney.com, help millions of people worldwide make informed decisions every day. Recently, NI embarked on a journey to transition their legacy data lake from Apache Hive to Apache Iceberg.

In this blog post, NI shares their journey, the innovative solutions they developed, and the key takeaways that can guide other organizations considering a similar path.

This article details NI’s practical approach to this complex migration. It focuses less on Apache Iceberg’s technical specifications and more on the real-world challenges and solutions encountered during the transition to Apache Iceberg, a challenge that many organizations are grappling with.

Why Apache Iceberg?

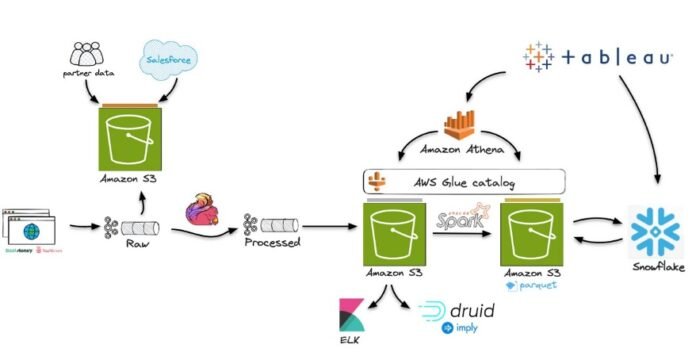

The architecture at NI followed the commonly used medallion architecture, a bronze-silver-gold layered framework, as shown in the figure:

Bronze layer: Unprocessed data from various sources, ingested through Apache Kafka brokers and stored in its raw format in Amazon Simple Storage Service (Amazon S3).

Silver layer: Contains cleaned and enriched data, processed using Apache Flink.

Gold layer: Holds analytics-ready datasets designed for business intelligence (BI) and reporting. These datasets are produced using Apache Spark pipelines and consumed by services such as Snowflake, Amazon Athena, Tableau, and Apache Druid. The data is stored in Apache Parquet format, with the AWS Glue Data Catalog providing metadata management.

While this architecture supported NI’s analytical needs, it lacked the flexibility required for a truly open and adaptable data platform. The gold layer was coupled only with query engines that supported Hive and the AWS Glue Data Catalog. Amazon Athena could be used directly, but Snowflake required maintaining another catalog in order to query these external tables. This made it difficult to evaluate or adopt alternative tools and engines without costly data duplication, query rewrites, or data catalog synchronization. As the business scaled, NI needed a data platform that could seamlessly support multiple query engines simultaneously with a single data catalog while avoiding vendor lock-in.

The power of Apache Iceberg

Apache Iceberg emerged as the ideal solution: a flexible, open table format that aligns with NI’s Data Lake First approach. Iceberg offers several critical advantages, such as ACID transactions, schema evolution, time travel, and performance improvements. However, the key strategic benefit lay in its ability to support multiple query engines simultaneously. It also has the following advantages:

Decoupling of storage and compute: The open table format separates the storage layer from the query engine. This makes it easy to swap engines and to support multiple engines simultaneously without data duplication.

Vendor independence: As an open table format, Apache Iceberg prevents vendor lock-in, giving you the flexibility to adapt to changing analytics needs.

Vendor adoption: Apache Iceberg is widely supported by major platforms and tools, providing seamless integration and long-term ecosystem compatibility.

By transitioning to Iceberg, NI was able to embrace a truly open data platform. This provides long-term flexibility, scalability, and interoperability while maintaining a unified source of truth for all analytics and reporting needs.

Challenges faced

Migrating a live production data lake to Iceberg was challenging because of operational complexities and legacy constraints. The data service at NI runs hundreds of Spark and machine learning pipelines, manages thousands of tables, and supports over 400 dashboards, all operating 24/7. Any migration had to happen without production interruptions, and coordinating such a migration while operations continued seamlessly was daunting.

NI needed to accommodate diverse users with varying requirements and timelines, from data engineers and data analysts to data scientists and BI teams.

Legacy constraints added to the challenge. Some of the existing tools didn’t fully support Iceberg, so Hive-backed tables had to be maintained for compatibility. NI also realized that not all users could adopt Iceberg immediately. A plan was therefore required to allow incremental transitions without downtime or disruption to ongoing operations.

Key pillars for migration

To help ensure a smooth and successful transition, six critical pillars were defined:

Support ongoing operations: Maintain uninterrupted compatibility with existing systems and workflows during the migration process.

User transparency: Minimize disruption for users by preserving existing table names and access patterns.

Gradual consumer migration: Allow consumers to adopt Iceberg at their own pace, avoiding a forced, simultaneous switchover.

ETL flexibility: Migrate ETL pipelines to Iceberg without imposing constraints on development or deployment.

Cost effectiveness: Minimize storage and compute duplication and overhead during the migration period.

Minimize maintenance: Reduce the operational burden of managing dual table formats (Hive and Iceberg) during the transition.

Evaluating conventional migration approaches

Apache Iceberg supports two main approaches for migration: in-place migration and rewrite-based migration.

In-place migration

How it works: Converts an existing dataset into an Iceberg table without duplicating data. It creates Iceberg metadata on top of the existing files while preserving their format and layout.

Advantages:

Cost-effective in terms of storage (no data duplication)

Simplified implementation

Maintains existing table names and locations

No data movement and minimal compute requirements, translating into lower cost

Disadvantages:

Downtime required: All write operations must be paused during conversion. This was unacceptable in NI’s case because data and analytics are considered mission critical and run 24/7

No gradual adoption: All users must switch to Iceberg simultaneously, increasing the risk of disruption

Limited validation: No opportunity to validate data before cutover; rollback requires restoring from backups

Technical constraints: Schema evolution during migration can be challenging; data type incompatibilities can halt the entire process

Rewrite-based migration

How it works: Rewrite-based migration creates a new Iceberg table by rewriting and reorganizing existing dataset files into Iceberg’s optimized format and structure for improved performance and data management.

Advantages:

Zero downtime during migration

Supports gradual consumer migration

Enables thorough validation

Simple rollback mechanism

Disadvantages:

Resource overhead: Double storage and compute costs during migration

Maintenance complexity: Managing two parallel data pipelines increases operational burden

Consistency challenges: Maintaining perfect consistency between the two systems is difficult

Performance impact: Increased latency because of dual writes; potential pipeline slowdowns

Why neither choice alone was adequate

NI concluded that neither option could meet all critical requirements:

In-place migration fell short because of unacceptable downtime and lack of support for gradual migration.

Rewrite-based migration fell short because of prohibitive cost overhead and complex operational management.

This analysis led NI to develop a hybrid approach that combines the advantages of both methods while minimizing their limitations.

The hybrid solution

The hybrid migration strategy was designed around five foundational components, using AWS analytics services for orchestration, processing, and state management.

Hive-to-Iceberg CDC: Automatically synchronize Hive tables with Iceberg using a custom change data capture (CDC) process to support existing consumers. Unlike traditional CDC, which focuses on row-level changes, this process operated at the partition level to preserve Hive’s behavior of updating tables by overwriting partitions. This kept Hive and Iceberg consistent without logic changes during the migration phase, making sure that the same data existed in both tables.

Continuous schema synchronization: Schema evolution during the migration introduced maintenance challenges. Automated schema sync processes compared Hive and Iceberg schemas, reconciling differences while maintaining type compatibility.

Iceberg-to-Hive reverse CDC: NI implemented a reverse CDC from Iceberg to Hive. This let the data team move extract, transform, and load (ETL) jobs to write directly to Iceberg, while Hive tables stayed up to date for downstream processes that had not yet migrated and still relied on them.

Alias management in Snowflake: Snowflake aliases made sure that Iceberg tables retained their original names, making the transition transparent to users. This approach minimized reconfiguration efforts across dependent teams and workflows.

Table replacement: Swap production tables while retaining their original names, completing the migration.

Technical deep dive

The migration from Hive to Iceberg consisted of several steps:

1. Hive-to-Iceberg CDC pipeline

Goal: Keep Hive and Iceberg tables synchronized without duplicating effort.

The preceding figure shows how every partition written to the Hive table is automatically and transparently copied to the Iceberg table using a CDC process. This keeps both tables synchronized, enabling a seamless and incremental migration without disrupting downstream systems. NI chose partition-level synchronization because the legacy Hive ETL jobs already wrote updates by overwriting entire partitions and updating the partition location. Using the same approach in the CDC pipeline kept it consistent with how data was originally managed, making the migration smoother and avoiding the need to rework row-level logic.

Implementation:

To keep Hive and Iceberg tables synchronized without duplicating effort, NI implemented a streamlined pipeline. Whenever partitions in Hive tables are updated, the AWS Glue Data Catalog emits events such as UpdatePartition. Amazon EventBridge captured these events, filtered them for the relevant databases and tables according to an EventBridge rule, and triggered an AWS Lambda function. This function parsed the event metadata and sent the partition updates to an Apache Kafka topic.
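The following is a minimal Python sketch of the EventBridge-to-Kafka step, assuming the event shape shown in the sample Data Catalog event in this section. It is not NI's code. The rule pattern, the topic name hive-partition-updates, and the message fields are hypothetical. The Kafka producer is injected as a publish callable so the sketch stays self-contained.

```python
import json
from typing import Callable, Dict, List

# Hypothetical EventBridge rule pattern (pass json.dumps(EVENT_PATTERN) as the
# EventPattern argument of events.put_rule): only partition updates for the
# migrated databases reach the Lambda function.
EVENT_PATTERN = {
    "source": ["aws.glue"],
    "detail-type": ["Glue Data Catalog Table State Change"],
    "detail": {
        "typeOfChange": ["UpdatePartition"],
        "databaseName": ["dlk_visitor_funnel_dwh_production"],
    },
}

SYNC_TOPIC = "hive-partition-updates"  # hypothetical Kafka topic name

# publish(topic, key, value): abstraction over a real Kafka producer.
Publisher = Callable[[str, str, bytes], None]


def event_to_messages(event: Dict) -> List[Dict]:
    """Split one Data Catalog event into one message per changed partition."""
    detail = event["detail"]
    return [
        {
            "database": detail["databaseName"],
            "table": detail["tableName"],
            "partition": partition,
            "event_time": event["time"],
        }
        for partition in detail.get("changedPartitions", [])
    ]


def make_handler(publish: Publisher):
    """Build a Lambda handler bound to a Kafka publish function."""

    def handler(event: Dict, context) -> int:
        messages = event_to_messages(event)
        for msg in messages:
            # Keying by table sends all updates for one table to the same Kafka
            # partition (default partitioner), so they are consumed in order.
            key = f"{msg['database']}.{msg['table']}"
            publish(SYNC_TOPIC, key, json.dumps(msg).encode("utf-8"))
        return len(messages)

    return handler
```

With kafka-python, for example, publish could be lambda topic, key, value: producer.send(topic, key=key.encode("utf-8"), value=value). Here producer is a KafkaProducer created once, outside the handler.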

A Spark job running on Amazon EMR consumed the messages from Kafka, which contained the updated partition details from the Data Catalog events. Using that metadata, the Spark job read the updated partitions from the relevant Hive table and wrote them to the Iceberg table in Amazon S3 using the Spark Iceberg overwritePartitions API. The following is an example of such a Data Catalog event:

```json
{
  "id": "10397e54-c049-fc7b-76c8-59e148c7cbfc",
  "detail-type": "Glue Data Catalog Table State Change",
  "source": "aws.glue",
  "time": "2024-10-27T17:16:21Z",
  "region": "us-east-1",
  "detail": {
    "databaseName": "dlk_visitor_funnel_dwh_production",
    "changedPartitions": [
      "2024-10-27"
    ],
    "typeOfChange": "UpdatePartition",
    "tableName": "fact_events"
  }
}
```

By targeting only changed partitions, the pipeline (shown in the following figure) significantly reduced the need for costly full-table rewrites. Iceberg’s metadata layers, including snapshots and manifest files, were updated on every write to capture these changes. This provided efficient and accurate synchronization between Hive and Iceberg tables.
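The consumer side can be sketched the same way. The following hypothetical helper turns one Kafka message into a sync plan: a Hive source table, an Iceberg target table, and a partition filter. It then applies that plan with Spark's DataFrame.writeTo(...).overwritePartitions() API. It is not NI's implementation, and it rests on several assumptions:

- Each message is a JSON object with database, table, and partition fields.
- The table has a single partition column, named dt here for illustration.
- The Iceberg tables live in a database named after the Hive database plus an _iceberg_migration suffix.
- The Spark Iceberg catalog is named glue_catalog.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class SyncPlan:
    source_table: str      # Hive table whose partition was just overwritten
    target_table: str      # Iceberg table to keep in sync
    partition_filter: str  # SQL predicate selecting the changed partition


def plan_from_message(raw: bytes, partition_column: str = "dt",
                      target_db_suffix: str = "_iceberg_migration",
                      catalog: str = "glue_catalog") -> SyncPlan:
    """Build a sync plan from one Kafka message (hypothetical naming scheme)."""
    msg = json.loads(raw)
    db, table, value = msg["database"], msg["table"], msg["partition"]
    # Double any single quotes so the partition value is a valid SQL literal.
    literal = value.replace("'", "''")
    return SyncPlan(
        source_table=f"{db}.{table}",
        target_table=f"{catalog}.{db}{target_db_suffix}.{table}",
        partition_filter=f"{partition_column} = '{literal}'",
    )


def apply_plan(spark, plan: SyncPlan) -> None:
    """Copy the changed Hive partition into Iceberg.

    `spark` is an existing SparkSession with the Iceberg catalog configured."""
    changed = spark.table(plan.source_table).where(plan.partition_filter)
    # overwritePartitions() replaces only the partitions present in `changed`,
    # mirroring how the Hive jobs overwrite whole partitions.
    changed.writeTo(plan.target_table).overwritePartitions()
```

Because each partition is overwritten as a whole, replaying a Kafka message after a failure rewrites the same partition with the same data. This makes the consumer safe to retry.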

2. Iceberg-to-Hive reverse CDC pipeline

Goal: Support Hive consumers while allowing ETL pipelines to transition to Iceberg.

The preceding figure shows the reverse process, where every partition written to the Iceberg table is automatically and transparently copied to the Hive table using a CDC mechanism. This keeps the two systems synchronized, so legacy systems that still rely on Hive continue to receive updates while the transition to Iceberg proceeds.

Implementation:

Synchronizing data from Iceberg tables back to Hive tables presented a different challenge. Unlike Hive tables, the Data Catalog doesn’t track partition updates for Iceberg tables, because Iceberg manages partitions internally rather than in the catalog. This meant NI couldn’t rely on Data Catalog events to detect partition changes.

To address this, NI implemented a solution similar to the previous flow but adapted to Iceberg’s architecture. Apache Spark was used to query Iceberg’s metadata tables, specifically the snapshots and entries tables, to identify the partitions modified since the last synchronization. The query used was:

```sql
SELECT e.data_file.partition, MAX(s.committed_at) AS last_modified_time
FROM $target_table.snapshots s
JOIN $target_table.entries e ON s.snapshot_id = e.snapshot_id
WHERE s.committed_at > '$last_sync_time'
GROUP BY e.data_file.partition;
```

This query returned only the partitions updated since the last synchronization, so the process could focus solely on the changed data. Using this information, as in the earlier process, a Spark job read the updated partitions from Iceberg and wrote them back to the corresponding Hive table, keeping both tables synchronized.
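The following sketch makes the incremental logic concrete. It reproduces what the metadata query computes using plain Python over in-memory rows instead of Spark. Each snapshot row carries snapshot_id and committed_at, and each entry row carries snapshot_id and the data file's partition, mirroring the columns the query reads.

Advancing last_sync_time to the newest commit that was processed is an assumption about how the checkpoint might be maintained. The post says only that synchronization state is kept in DynamoDB.

```python
from datetime import datetime
from typing import Dict, List, Tuple


def changed_partitions(snapshots: List[Dict], entries: List[Dict],
                       last_sync_time: datetime) -> Dict[Tuple, datetime]:
    """Map each partition to its latest commit time, considering only
    snapshots committed after last_sync_time (same result as the SQL query)."""
    # WHERE s.committed_at > last_sync_time
    recent = {s["snapshot_id"]: s["committed_at"] for s in snapshots
              if s["committed_at"] > last_sync_time}
    result: Dict[Tuple, datetime] = {}
    # JOIN entries ON snapshot_id, then GROUP BY partition with MAX(committed_at)
    for e in entries:
        committed = recent.get(e["snapshot_id"])
        if committed is None:
            continue
        part = e["partition"]
        if part not in result or committed > result[part]:
            result[part] = committed
    return result


def next_checkpoint(changes: Dict[Tuple, datetime],
                    last_sync_time: datetime) -> datetime:
    """New watermark: the newest commit synchronized, or the old one if none."""
    return max(changes.values(), default=last_sync_time)
```

Saving the new watermark only after the Hive writes succeed means a failed run simply reselects the same partitions next time. This is harmless because each partition is rewritten as a whole.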

3. Continuous schema synchronization

Goal: Automate schema updates to maintain consistency across Hive and Iceberg.

The preceding figure shows how the automated schema sync process keeps Hive and Iceberg table schemas consistent by automatically propagating schema changes, in this example the addition of the Channel column. This minimized manual work and double maintenance during the extended migration period.

Implementation:

To handle schema changes between Hive and Iceberg, NI implemented a process that detects and reconciles differences automatically. When a schema change happens in a Hive table, the Data Catalog emits an UpdateTable event. This event triggers a Lambda function (routed through EventBridge), which retrieves the Hive table’s updated schema from the Data Catalog and compares it to the Iceberg schema.

It’s important to call out that in NI’s setup, schema changes originate from Hive, because the Iceberg table is hidden behind aliases throughout the system. Because Iceberg is primarily used for Snowflake, a one-way sync from Hive to Iceberg is sufficient. As a result, there is no mechanism to detect or handle schema changes made directly in Iceberg, because they aren’t needed in the current workflow.

During schema reconciliation (shown in the following figure), data types are normalized to ensure compatibility, for example converting Hive’s VARCHAR to Iceberg’s STRING. Any new fields or type changes are validated and applied to the Iceberg schema using a Spark job running on Amazon EMR. Amazon DynamoDB stores schema synchronization checkpoints, which make it possible to track changes over time and keep the Hive and Iceberg schemas consistent.
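The reconciliation step can be sketched as a pure function that normalizes Hive types and emits Iceberg Spark DDL for the differences. This is an illustration, not NI's code, and it assumes the following:

- Schemas arrive as ordered lists of (name, type) pairs, such as the Name and Type fields of the Data Catalog's column list.
- The normalization map and the set of allowed widenings shown here are illustrative subsets. Iceberg only permits a few safe type promotions, such as int to bigint and float to double, so any other type change is reported rather than applied.

```python
import re
from typing import List, Tuple

Schema = List[Tuple[str, str]]

# Safe widenings Iceberg accepts for ALTER COLUMN ... TYPE (subset shown).
ALLOWED_PROMOTIONS = {("int", "bigint"), ("float", "double")}


def normalize_type(hive_type: str) -> str:
    """Map a Hive type onto the name used on the Iceberg side (illustrative)."""
    t = hive_type.strip().lower()
    # VARCHAR(n) and CHAR(n) have no Iceberg equivalent; both become STRING.
    if re.fullmatch(r"(var)?char\(\d+\)", t):
        return "string"
    return t


def reconcile(table: str, hive: Schema, iceberg: Schema) -> Tuple[List[str], List[str]]:
    """Return (ddl_statements, problems) that bring `iceberg` in line with `hive`."""
    current = {name.lower(): typ.lower() for name, typ in iceberg}
    ddl: List[str] = []
    problems: List[str] = []
    for name, hive_type in hive:
        wanted = normalize_type(hive_type)
        have = current.get(name.lower())
        if have is None:
            # New Hive column: add it to the Iceberg table.
            ddl.append(f"ALTER TABLE {table} ADD COLUMN {name} {wanted}")
        elif have != wanted:
            if (have, wanted) in ALLOWED_PROMOTIONS:
                ddl.append(f"ALTER TABLE {table} ALTER COLUMN {name} TYPE {wanted}")
            else:
                # Unsafe change: surface it instead of rewriting data silently.
                problems.append(f"{name}: {have} -> {wanted} needs manual review")
    return ddl, problems
```

For the figure's example, adding a channel column on the Hive side produces a single ADD COLUMN statement. The Spark job could then run each statement in order with spark.sql.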

Automating schema synchronization significantly reduced maintenance overhead and freed developers from manually keeping schemas in sync. This made the long migration period much more manageable.

The preceding figure depicts the automated workflow that keeps schemas consistent between Hive and Iceberg tables:

1. AWS Glue captures table state change events from Hive, which trigger an EventBridge event.
2. The event invokes a Lambda function that fetches metadata from DynamoDB.
3. The function compares the Hive and Iceberg schemas fetched from AWS Glue.
4. If a mismatch is detected, the Iceberg schema is updated to match.

This minimized manual intervention and supported smooth operation during the migration.
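The post does not describe what the DynamoDB items contain. The following sketch shows one plausible design, purely as an assumption. It stores a fingerprint of the last Hive schema that was synchronized, so the Lambda function can skip the comparison when an UpdateTable event didn't change any columns (for example, a table property update). The ddb_table argument is expected to behave like a boto3 DynamoDB Table resource. The key name table_name and the fingerprint attribute are hypothetical.

```python
import hashlib
import json
from typing import List, Optional, Tuple


def schema_fingerprint(columns: List[Tuple[str, str]]) -> str:
    """Stable hash of an ordered column list; case is ignored."""
    canonical = json.dumps([[name.lower(), typ.lower()] for name, typ in columns])
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class SchemaCheckpoints:
    """Last-synchronized schema fingerprint per table, kept in DynamoDB.

    `ddb_table` should behave like boto3.resource("dynamodb").Table(name)."""

    def __init__(self, ddb_table):
        self.ddb_table = ddb_table

    def last_fingerprint(self, table_name: str) -> Optional[str]:
        # get_item returns a dict that contains "Item" only when the key exists.
        item = self.ddb_table.get_item(Key={"table_name": table_name}).get("Item")
        return item["fingerprint"] if item else None

    def needs_sync(self, table_name: str, hive_columns) -> bool:
        return self.last_fingerprint(table_name) != schema_fingerprint(hive_columns)

    def record(self, table_name: str, hive_columns) -> None:
        # Call only after the Iceberg schema update has succeeded.
        self.ddb_table.put_item(Item={
            "table_name": table_name,
            "fingerprint": schema_fingerprint(hive_columns),
        })
```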

4. Alias management in Snowflake

Goal: Enable Snowflake consumers to adopt Iceberg without changing query references.

The preceding figure shows how Snowflake aliases enable a seamless migration by mapping queries such as SELECT platform, COUNT(clickouts) FROM funnel.clickouts to Iceberg tables in the Glue Data Catalog. Even with suffixes added during the Iceberg migration, existing queries and workflows remain unchanged, minimizing disruption for BI tools and analysts.

Implementation:

To ensure a seamless experience for BI tools and analysts during the migration, NI used Snowflake aliases to map external tables to the Iceberg metadata stored in the Data Catalog. Because the aliases matched the original Hive table names, existing queries and reports kept working without interruption. For example, an external table was created in Snowflake and aliased to the original table name, as shown in the following query:

```sql
CREATE OR REPLACE ICEBERG TABLE dlk_visitor_funnel_dwh_production.aggregated_cost
  EXTERNAL_VOLUME = 's3_dlk_visitor_funnel_dwh_production_iceberg_migration'
  CATALOG = 'glue_dlk_visitor_funnel_dwh_production_iceberg_migration'
  CATALOG_TABLE_NAME = 'aggregated_cost';

ALTER ICEBERG TABLE dlk_visitor_funnel_dwh_production.aggregated_cost REFRESH;
```

When the migration was complete, a simple change to the alias pointed it to the new location or schema. This made the transition seamless and minimized disruption to user workflows.
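With thousands of tables, statements like the preceding Snowflake example are easier to generate than to write by hand. The following Python sketch renders the same two statements for any table. It assumes the external volume and catalog integration names are derived from the database name with an _iceberg_migration suffix. That convention is inferred from the single example shown, not a documented NI rule.

```python
from typing import List


def snowflake_iceberg_ddl(database: str, table: str,
                          suffix: str = "_iceberg_migration") -> List[str]:
    """Render CREATE and REFRESH statements for one Glue-cataloged Iceberg table.

    Volume and catalog names follow the pattern of the example (an assumption)."""
    volume = f"s3_{database}{suffix}"
    catalog = f"glue_{database}{suffix}"
    create = (
        f"CREATE OR REPLACE ICEBERG TABLE {database}.{table}\n"
        f"  EXTERNAL_VOLUME = '{volume}'\n"
        f"  CATALOG = '{catalog}'\n"
        f"  CATALOG_TABLE_NAME = '{table}';"
    )
    # REFRESH makes Snowflake pick up the latest metadata from the Glue catalog.
    refresh = f"ALTER ICEBERG TABLE {database}.{table} REFRESH;"
    return [create, refresh]
```

Repointing a table at the end of the migration then amounts to rerunning the generator with the final names.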

5. Table replacement

Goal: Once all ETLs and related data workflows had successfully moved to Apache Iceberg and everything worked correctly with the synchronization flow, it was time for the final phase of the migration. The primary objective was to keep the original table names, avoiding prefixes like those used in the earlier, intermediate migration steps. This kept the configuration tidy and free from unnecessary naming complications.

The preceding figure shows the table replacement that completed the migration. Hive on Amazon EMR was used to register Parquet files as Iceberg tables while preserving the original table names and avoiding data duplication.

Implementation:

One challenge was that AWS Glue doesn’t support renaming tables, which ruled out simply renaming the existing synchronization flow tables. In addition, AWS Glue doesn’t support the migrate procedure, which creates Iceberg metadata on top of the existing data files while preserving the original table name. To overcome this limitation, NI used a Hive metastore on an Amazon EMR cluster. Because Hive on Amazon EMR operates in a separate metastore environment, NI could create the final tables with their original names and define any required schema and table names without interference.

The add_files procedure was used to methodically register all the existing Parquet files, building all the necessary metadata within the Hive metastore. This was a crucial step, because it ensured that all data files were correctly cataloged and linked within the metastore.
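The following is a sketch of how the registration calls might be generated per table. It uses Iceberg's Spark add_files procedure with its table, source_table, and check_duplicate_files arguments. A quoted parquet prefix in source_table tells the procedure to read files from a path rather than from a catalog table. The catalog name hive_prod and the S3 path in the usage example are hypothetical. The target Iceberg table is assumed to already exist with the same schema and partitioning as the files being registered.

```python
def add_files_call(catalog: str, table: str, parquet_path: str,
                   check_duplicate_files: bool = True) -> str:
    """Build a Spark SQL CALL that registers existing Parquet files in an
    Iceberg table without rewriting or copying them."""
    # Backticks around `parquet` and the path mark a file-based source
    # rather than a catalog table.
    source = f"`parquet`.`{parquet_path.rstrip('/')}`"
    flag = "true" if check_duplicate_files else "false"
    return (
        f"CALL {catalog}.system.add_files("
        f"table => '{table}', "
        f"source_table => '{source}', "
        f"check_duplicate_files => {flag})"
    )


# Example (hypothetical catalog and bucket); the result would be passed to spark.sql(...).
print(add_files_call("hive_prod", "dlk_visitor_funnel_dwh_production.fact_events",
                     "s3://example-bucket/fact_events/"))
```

Keeping check_duplicate_files enabled makes the procedure fail, rather than register the same file twice, if a table is accidentally processed again.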

The preceding figure shows how a production table moved to Iceberg: the add_files procedure registered the existing Parquet files and created Iceberg metadata for them. This ensured a smooth migration while preserving the original data and avoiding duplication.

This setup reused the existing Parquet files without duplicating data, saving resources. Although the sync flow had used separate buckets, NI chose to keep the original buckets for the final architecture and cleaned up the intermediate files. This resulted in a different folder structure on Amazon S3. The historical data has a subfolder for each partition under the root table directory, while new Iceberg data is organized in subfolders within a data folder. This difference was an acceptable price for avoiding data duplication and preserving the original Amazon S3 buckets.

Technical recap

The AWS Glue Data Catalog served as the primary source of truth for schema and table updates. Amazon EventBridge captured Data Catalog events to trigger synchronization workflows. AWS Lambda parsed event metadata and managed schema synchronization, while Apache Kafka buffered events for real-time processing. Apache Spark on Amazon EMR handled data transformations and incremental updates, and Amazon DynamoDB maintained state, including synchronization checkpoints and table mappings. Finally, Snowflake consumed Iceberg tables through aliases without disrupting existing workflows.

Migration outcome

The migration was completed with zero downtime. Operations continued throughout, supporting hundreds of pipelines and dashboards without interruption. The migration was also cost-optimized: incremental updates and partition-level synchronization minimized the use of compute and storage resources. Finally, NI established a modern, vendor-neutral platform that scales with their evolving analytics and machine learning needs. It enables seamless integration with multiple compute and query engines, supporting flexibility and further innovation.

Conclusion

Natural Intelligence’s migration to Apache Iceberg was a pivotal step in modernizing the company’s data infrastructure. By adopting a hybrid strategy and event-driven architectures, NI achieved a seamless transition that balanced innovation with operational stability. The journey underscored the importance of careful planning, understanding the data ecosystem, and an organization-first approach.

Above all, the business stayed in focus and continuity of the user experience was prioritized. As a result, NI unlocked the flexibility and scalability of its data lake while minimizing disruption. Teams can now use cutting-edge analytics capabilities, and the company is positioned at the forefront of modern data management.

If you’re considering an Apache Iceberg migration or facing similar data infrastructure challenges, we encourage you to explore the possibilities. Embrace open formats, use automation, and design with your organization’s unique needs in mind. The journey might be complex, but the rewards in scalability, flexibility, and innovation are well worth the effort. You can use AWS Prescriptive Guidance to learn more about how to best use Apache Iceberg in your organization.

About the Authors

Yonatan Dolan is a Principal Analytics Specialist at Amazon Web Services. Yonatan is an Apache Iceberg evangelist.

Haya Stern is a Senior Director of Data at Natural Intelligence. She leads the development of NI’s large-scale data platform, with a focus on enabling analytics, streamlining data workflows, and improving developer efficiency. In the past year, she led the successful migration from the previous data architecture to a modern lakehouse based on Apache Iceberg and Snowflake.

Zion Rubin is a Data Architect at Natural Intelligence with ten years of experience architecting large-scale big data platforms. He is now focused on developing intelligent agent systems that turn complex data into real-time business insight.

Michał Urbanowicz is a Cloud Data Engineer at Natural Intelligence with expertise in migrating data warehouses and implementing robust retention, cleanup, and monitoring processes to ensure scalability and reliability. He also develops automations that streamline and support campaign management operations in cloud-based environments.
