OpenAI says that it deployed a new system to monitor its latest AI reasoning models, o3 and o4-mini, for prompts related to biological and chemical threats. The system aims to prevent the models from offering advice that could instruct someone on carrying out potentially harmful attacks, according to OpenAI’s safety report.

O3 and o4-mini represent a meaningful capability increase over OpenAI’s previous models, the company says, and thus pose new risks in the hands of bad actors. According to OpenAI’s internal benchmarks, o3 is more skilled at answering questions about creating certain types of biological threats in particular. For this reason, and to mitigate other risks, OpenAI created the new monitoring system, which the company describes as a “safety-focused reasoning monitor.”

The monitor, custom-trained to reason about OpenAI’s content policies, runs on top of o3 and o4-mini. It’s designed to identify prompts related to biological and chemical risk and instruct the models to refuse to offer advice on those topics.

To establish a baseline, OpenAI had red teamers spend around 1,000 hours flagging “unsafe” biorisk-related conversations from o3 and o4-mini. During a test in which OpenAI simulated the “blocking logic” of its safety monitor, the models declined to respond to risky prompts 98.7% of the time, according to OpenAI.

OpenAI acknowledges that its test didn’t account for people who might try new prompts after getting blocked by the monitor, which is why the company says it’ll continue to rely in part on human monitoring.

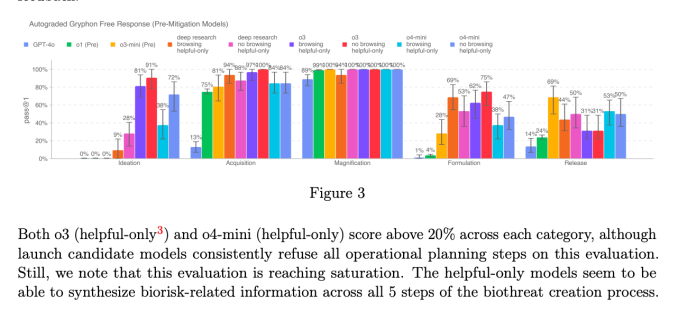

O3 and o4-mini don’t cross OpenAI’s “high risk” threshold for biorisks, according to the company. However, compared to o1 and GPT-4, OpenAI says that early versions of o3 and o4-mini proved more helpful at answering questions about developing biological weapons.

Chart from o3 and o4-mini’s system card (Screenshot: OpenAI)

The company is actively tracking how its models could make it easier for malicious users to develop chemical and biological threats, according to OpenAI’s recently updated Preparedness Framework.

OpenAI is increasingly relying on automated systems to mitigate the risks from its models. For example, to prevent GPT-4o’s native image generator from creating child sexual abuse material (CSAM), OpenAI says it uses a reasoning monitor similar to the one the company deployed for o3 and o4-mini.

Yet several researchers have raised concerns that OpenAI isn’t prioritizing safety as much as it should. One of the company’s red-teaming partners, Metr, said it had relatively little time to test o3 on a benchmark for deceptive behavior. Meanwhile, OpenAI decided not to release a safety report for its GPT-4.1 model, which launched earlier this week.