This post is co-written with Roy Ninio from AppsFlyer.

Organizations worldwide aim to harness the power of data to drive smarter, more informed decision-making by embedding data at the core of their processes. Using data-driven insights helps you respond more effectively to unexpected challenges, foster innovation, and deliver enhanced experiences to your customers. In fact, data has transformed how organizations drive decision-making, but historically, managing the infrastructure to support it posed significant challenges and required specific skill sets and dedicated personnel. The complexity of setting up, scaling, and maintaining large-scale data systems impacted agility and pace of innovation. This reliance on experts and intricate setups often diverted resources from innovation, slowed time-to-market, and hindered the ability to respond to changes in industry demands.

AppsFlyer is a leading analytics and attribution company designed to help businesses measure and optimize their marketing efforts across mobile, web, and connected devices. With a focus on privacy-first innovation, AppsFlyer empowers organizations to make data-driven decisions while respecting user privacy and compliance regulations. AppsFlyer provides tools for tracking user acquisition, engagement, and retention, delivering actionable insights to improve ROI and streamline marketing strategies.

In this post, we share how AppsFlyer successfully migrated their big data infrastructure from self-managed Hadoop clusters to Amazon EMR Serverless, detailing their best practices, challenges to overcome, and lessons learned that can help guide other organizations in similar transformations.

Why AppsFlyer embraced a serverless approach for big data

AppsFlyer manages one of the largest-scale data infrastructures in the industry, processing 100 PB of data daily, handling millions of events per second, and running thousands of jobs across nearly 100 self-managed Hadoop clusters. The AppsFlyer architecture comprises many open source data engineering technologies, including but not limited to Apache Spark, Apache Kafka, Apache Iceberg, and Apache Airflow. Although this setup has powered operations for years, the growing complexity of scaling resources to meet fluctuating demands, coupled with the operational overhead of maintaining clusters, prompted AppsFlyer to rethink their big data processing strategy.

EMR Serverless is a modern, scalable solution that alleviates the need for manual cluster management while dynamically adjusting resources to match real-time workload requirements. With EMR Serverless, scaling up or down happens within seconds, minimizing idle time and interruptions like spot terminations.

This shift has freed engineering teams to focus on innovation, improved resilience and high availability, and future-proofed the architecture to support their ever-increasing demands. By only paying for compute and memory resources used during runtime, AppsFlyer also optimized costs and minimized charges for idle resources, marking a significant step forward in efficiency and scalability.

Solution overview

AppsFlyer’s previous architecture was built around self-managed Hadoop clusters running on Amazon Elastic Compute Cloud (Amazon EC2) and handled the scale and complexity of the data workflows. Although this setup supported operational needs, it required substantial manual effort to maintain, scale, and optimize.

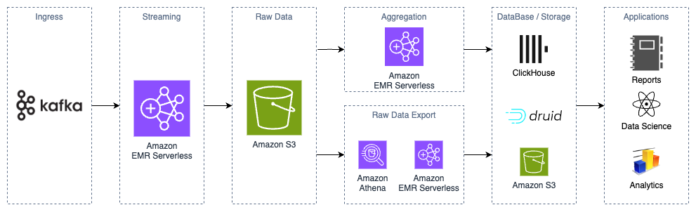

AppsFlyer orchestrated over 100,000 daily workflows with Airflow, managing both streaming and batch operations. Streaming pipelines used Spark Streaming to ingest real-time data from Kafka, writing raw datasets to an Amazon Simple Storage Service (Amazon S3) data lake while simultaneously loading them into BigQuery and Google Cloud Storage to build logical data layers. Batch jobs then processed this raw data, transforming it into actionable datasets for internal teams, dashboards, and analytics workflows. Additionally, some processed outputs were ingested into external data sources, enabling seamless delivery of AppsFlyer insights to customers across the web.

For analytics and fast queries, real-time data streams were ingested into ClickHouse and Druid to power dashboards. Additionally, Iceberg tables were created from Delta Lake raw data and made available through Amazon Athena for further data exploration and analytics.

With the migration to EMR Serverless, AppsFlyer replaced its self-managed Hadoop clusters, bringing significant improvements to scalability, cost-efficiency, and operational simplicity.

Spark-based workflows, including streaming and batch jobs, were migrated to run on EMR Serverless, taking advantage of its elasticity to scale dynamically with workload demands.

This transition has significantly reduced operational overhead, alleviating the need for manual cluster management, so teams can focus more on data processing and less on infrastructure.

The following diagram illustrates the solution architecture.

This post reviews the main challenges and lessons learned by the team at AppsFlyer from this migration.

Challenges and lessons learned

Migrating a large-scale organization like AppsFlyer, with dozens of teams, from Hadoop to EMR Serverless was a significant challenge, especially because many R&D teams had limited or no prior experience managing infrastructure. To provide a smooth transition, AppsFlyer’s Data Infrastructure (DataInfra) team developed a comprehensive migration strategy that empowered the R&D teams to seamlessly migrate their pipelines.

In this section, we discuss how AppsFlyer approached the challenge and achieved success for the entire organization.

Centralized preparation by the DataInfra team

To provide a seamless transition to EMR Serverless, the DataInfra team took the lead in centralizing preparation efforts:

Clear ownership – Taking full responsibility for the migration, the team planned, guided, and supported R&D teams throughout the process.

Structured migration guide – A detailed, step-by-step guide was created to streamline the transition from Hadoop, breaking down the complexities and making it accessible to teams with limited infrastructure experience.

Building a strong support network

To make sure the R&D teams had the resources they needed, AppsFlyer established a robust support environment:

Data community – The primary resource for answering technical questions. It encouraged knowledge sharing across teams and was spearheaded by the DataInfra team.

Slack support channel – A dedicated channel where the DataInfra team actively responded to questions and guided teams through the migration process. This real-time support significantly reduced bottlenecks and helped teams resolve issues quickly.

Infrastructure templates with best practices

Recognizing the complexity of the migration, the DataInfra team provided standardized templates to help teams start quickly and efficiently:

Infrastructure as code (IaC) templates – They developed Terraform templates with best practices for building applications on EMR Serverless. These templates included code examples and real production workflows already migrated to EMR Serverless. Teams could quickly bootstrap their projects by using these ready-made templates.

Cross-account access solutions – Operating across multiple AWS accounts required managing secure access between EMR Serverless accounts (where jobs run) and data storage accounts (where datasets reside). To streamline this, a step-by-step module was developed for setting up cross-account access using Assume Role permissions. Additionally, a dedicated repository was created, so teams can define and automate role and policy creation, providing seamless and scalable access management.

Airflow integration

As AppsFlyer’s primary workflow scheduler, Airflow plays a critical role, making it essential to provide a seamless transition for its users.

AppsFlyer developed a dedicated Airflow operator for executing Spark jobs on EMR Serverless, carefully designed to replicate the functionality of the existing Hadoop-based Spark operator. In addition, a Python package containing the relevant operators was made available across all Airflow clusters. This approach minimized code changes, allowing teams to transition seamlessly with minimal modifications.

Solving common permission challenges

To streamline permissions management, AppsFlyer developed targeted solutions for frequent use cases:

Comprehensive documentation – Provided detailed instructions for handling permissions for services like Athena, BigQuery, Vault, Git, Kafka, and many more.

Standardized Spark defaults configuration for teams to apply to their applications – Included built-in solutions for collecting lineage from Spark jobs running on EMR Serverless, providing accountability and traceability.

Continuous engagement with R&D teams

To promote progress and maintain alignment across teams, AppsFlyer introduced the following measures:

Weekly meetings – Weekly status meetings to review each team’s migration efforts. Teams shared updates, challenges, and commitments, fostering transparency and collaboration.

Assistance – Proactive assistance was provided for issues raised during meetings to minimize delays. This made sure that the teams were on track and had the support they needed to meet their commitments.

By implementing these strategies, AppsFlyer transformed the migration process from a daunting challenge into a structured and well-supported journey. Key outcomes included:

Empowered teams – R&D teams with minimal infrastructure experience were able to confidently migrate their pipelines.

Standardized practices – Infrastructure templates and predefined solutions provided consistency and best practices across the organization.

Reduced downtime – The custom Airflow operator and detailed documentation minimized disruptions to existing workflows.

Cross-account compatibility – With seamless cross-account access, teams could run jobs and access data efficiently.

Improved collaboration – The data community and Slack support channel fostered a sense of collaboration and shared responsibility across teams.

Migrating an entire organization’s data workflows to EMR Serverless is a complex task, but by investing in preparation, templates, and support, AppsFlyer successfully streamlined the process for all R&D teams in the company.

This approach can serve as a model for organizations undertaking similar migrations.

Spark application code management and deployment

For AppsFlyer data engineers, developing and deploying Spark applications is a core daily responsibility. The Data Platform team focuses on identifying and implementing the right set of tools and safeguards that would not only simplify the migration to EMR Serverless, but also streamline ongoing operations.

There are two different approaches available for running Spark code on EMR Serverless: custom container images and JARs or Python files. At the beginning of the exploration, custom images looked promising because they allow greater customization than JARs, which should have given the DataInfra team a smoother migration for existing workloads. After deeper research, it became clear that custom images are powerful but come with a cost that would need to be evaluated at large scale. Custom images presented the following challenges:

Custom images are supported as of version 6.9.0, but some of AppsFlyer’s workloads used earlier versions.

EMR Serverless resources run from the moment EMR Serverless starts downloading the image until workers are stopped. This means you are charged for aggregate vCPU, memory, and storage resources during the image download phase.

They required a different continuous integration and delivery (CI/CD) approach than compiling a JAR or Python file, leading to operational work that should be minimized as much as possible.

AppsFlyer decided to go all in with JARs and allow custom images only in exceptional cases where the required customization demanded them. In the end, it turned out that non-custom images were suitable for AppsFlyer’s use cases.

CI/CD perspective

From a CI/CD perspective, AppsFlyer’s DataInfra team decided to align with AppsFlyer’s GitOps vision, making sure that both infrastructure and application code are version-controlled, built, and deployed using Git operations.

The following diagram illustrates the GitOps approach AppsFlyer adopted.

JARs continuous integration

For CI, the process responsible for building the application artifacts, several options were explored. The following key considerations drove the exploration process:

Use Amazon S3 as the native JAR source for EMR Serverless

Support different versions for the same job

Support staging and production environments

Allow hotfixes, patches, and rollbacks

Using AppsFlyer’s existing external package repository led to challenges, because it required them to build a custom delivery into Amazon S3 or a complex runtime ability to fetch the code externally.

Using Amazon S3 directly also allowed several possible approaches:

Buckets – Use single vs. separated buckets for staging and production

Versions – Use Amazon S3 native object versioning vs. uploading a new file

Hotfix – Override the same job’s JAR file vs. uploading a new one

Finally, the decision was to go with immutable builds for consistent deployment across the environments.

Each push to the main branch of a Spark job’s Git repository triggers a CI process that validates the semantic versioning (semver) assignment, compiles the JAR artifact, and uploads it to Amazon S3. Each artifact is uploaded to three different paths according to the version of the JAR, and also includes a version tag for the S3 object:

<bucket>/<job-name>/<major>.<minor>.<patch>/app.jar

<bucket>/<job-name>/<major>.<minor>/app.jar

<bucket>/<job-name>/<major>/app.jar

AppsFlyer can now work at fine granularity and assign each EMR Serverless job to a pinpointed version. Some jobs can run with the latest major version, whereas other stability-sensitive and SLA-sensitive jobs require a lock to a specific patch version.
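As a rough illustration of the publishing step, the following Python sketch derives the three object keys for one build from its semver string. The function name `artifact_keys`, the job-name prefix, and the key layout are illustrative assumptions, not AppsFlyer’s actual CI code.

```python
import re

# Strict MAJOR.MINOR.PATCH; pre-release and build suffixes are out of scope here.
SEMVER_RE = re.compile(r"(\d+)\.(\d+)\.(\d+)")


def artifact_keys(job_name: str, version: str) -> list[str]:
    """Return the three S3 keys one build is published under (hypothetical layout)."""
    match = SEMVER_RE.fullmatch(version)
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {version!r}")
    major, minor, patch = match.groups()
    return [
        f"{job_name}/{major}.{minor}.{patch}/app.jar",  # exact patch pin
        f"{job_name}/{major}.{minor}/app.jar",          # newest patch of this minor
        f"{job_name}/{major}/app.jar",                  # newest release of this major
    ]
```

A job that must stay on a known-good build would reference the first key, and a job that tolerates upgrades within a major version would reference the last one.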

EMR Serverless continuous deployment

Uploading the files to Amazon S3 is the final step in the CI process, which then leads to a separate CD process.

CD is done by changing the infrastructure code, which is Terraform based, to point to the new JAR that was uploaded to Amazon S3. Then the staging or production application can start using the newly uploaded code and the process can be considered deployed.

Spark application rollbacks

If they need an application rollback, AppsFlyer points the EMR Serverless job IaC configuration from the current impaired JAR version to the previous stable JAR version in the relevant Amazon S3 path.

AppsFlyer believes that every automation impacting production, like CD, requires a break-glass mechanism for emergency situations. In such cases, AppsFlyer can manually override the needed S3 object (JAR file) while still using Amazon S3 versions in order to have better visibility and manual version control.

Single-job vs. multi-job applications

When using EMR Serverless, one important architectural decision is whether to create a separate application for each Spark job or use an automatic scaling application shared across multiple Spark jobs. The following table summarizes these considerations.

| Aspect | Single-job application | Multi-job application |
|---|---|---|
| Logical nature | Dedicated application for each job. | Shared application for multiple jobs. |
| Shared configurations | Limited shared configurations; each application is independently configured. | Allows shared configurations through spark-defaults, including executors, memory settings, and JARs. |
| Isolation | Maximum isolation; each job runs independently. | Maintains job-level isolation through distinct IAM roles despite sharing the application. |
| Flexibility | Flexible for unique configurations or resource requirements. | Reduces overhead by reusing configurations and using automatic scaling. |
| Overhead | Higher setup and management overhead due to multiple applications. | Lower administrative overhead but requires careful resource contention management. |
| Use cases | Suitable for jobs with unique requirements or strict isolation needs. | Ideal for related workloads that benefit from shared settings and dynamic scaling. |

By balancing these considerations, AppsFlyer tailored its EMR Serverless usage to efficiently meet the demands of diverse Spark workloads across their teams.

Airflow operator: Simplifying the transition to EMR Serverless

Before the migration to EMR Serverless, AppsFlyer’s teams relied on a custom Airflow Spark operator created by the DataInfra team.

This operator, packaged as a Python library, was integrated into the Airflow environment and became a key component of the data workflows.

It provided essential capabilities, including:

Retries and alerts – Built-in retry logic and PagerDuty alert integration

AWS role-based access – Automatic fetching of AWS permissions based on role names

Custom defaults – Setting Spark configurations and package defaults tailored for each job

State management – Job state tracking

This operator streamlined running Spark jobs on Hadoop and was highly tailored to AppsFlyer’s requirements.

When moving to EMR Serverless, the team chose to build a custom Airflow operator to align with their existing Spark-based workflows. They already had dozens of Directed Acyclic Graphs (DAGs) in production, so with this approach, they could keep their familiar interface, including custom handling for retries, alerting, and configurations, all without requiring broad changes across the board.

This abstraction provided a smoother migration by preserving the same development patterns and minimizing the effort of adapting to the native operator semantics.

The DataInfra team developed a dedicated, custom EMR Serverless operator to support the following goals:

Seamless migration – The operator was designed to closely mimic the interface of the existing Spark operator on Hadoop. This made sure that teams could migrate with minimal code changes.

Feature parity – They added the features missing from the native operator:

Built-in retry logic.

PagerDuty integration for alerts.

Automatic role-based permission fetching.

Default Spark configurations and package support for each job.

Simplified integration – It’s packaged as a Python library available in Airflow clusters. Teams could use the operator just like they did with the previous Spark operator.

The custom operator abstracts some of the underlying configurations required to submit jobs to EMR Serverless, aligning with AppsFlyer’s internal best practices and adding essential features.

The following is from an example DAG using the operator:

    return SparkBatchJobEmrServerlessOperator(
        task_id=task_id,  # Unique task identifier in the DAG
        jar_file=jar_file,  # Path to the Spark job JAR file on S3
        main_class="",
        spark_conf=spark_conf,
        app_id=default_args[""],  # EMR Serverless app ID
        execution_role=default_args[""],  # IAM role for job execution
        polling_interval_sec=120,  # How often to poll for job status
        execution_timeout=timedelta(hours=1),  # Max allowed runtime
        retries=5,  # Retry attempts for failed jobs
        app_args=[],  # Arguments to pass to the Spark job
        depends_on_past=True,  # Ensure sequential task execution
        tags={'owner': ''},  # Metadata for ownership
        aws_assume_role="",  # Role for cross-account access
        alerting_policy=ALERT_POLICY_CRITICAL.with_slack_channel(sc),  # Alerting integration
        owner="",
        dag=dag,  # DAG this task belongs to
    )
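AppsFlyer’s operator is internal, so its implementation is not shown here. To give a feel for what such an operator has to wrap, the following minimal sketch submits a JAR, polls for a terminal state, and resubmits on failure. It assumes `client` behaves like `boto3.client("emr-serverless")`; the function name `run_spark_job` and its parameters are hypothetical, and alerting and role fetching are left out.

```python
import time

# Job-run states after which polling stops.
TERMINAL_STATES = {"SUCCESS", "FAILED", "CANCELLED"}


def run_spark_job(client, app_id, execution_role_arn, jar_file, main_class,
                  app_args=(), retries=0, polling_interval_sec=120,
                  sleep=time.sleep):
    """Submit a JAR to EMR Serverless and wait for it, resubmitting on failure."""
    last_state = None
    for _attempt in range(retries + 1):
        response = client.start_job_run(
            applicationId=app_id,
            executionRoleArn=execution_role_arn,
            jobDriver={
                "sparkSubmit": {
                    "entryPoint": jar_file,
                    "entryPointArguments": list(app_args),
                    "sparkSubmitParameters": f"--class {main_class}",
                }
            },
        )
        job_run_id = response["jobRunId"]
        last_state = None
        # Poll until the run reaches a terminal state.
        while last_state not in TERMINAL_STATES:
            sleep(polling_interval_sec)
            job_run = client.get_job_run(applicationId=app_id, jobRunId=job_run_id)
            last_state = job_run["jobRun"]["state"]
        if last_state == "SUCCESS":
            return job_run_id
    raise RuntimeError(
        f"job did not succeed after {retries + 1} attempt(s); last state: {last_state}"
    )
```

Passing the client and the `sleep` function in as arguments keeps the sketch testable without AWS access; an Airflow operator would typically call similar logic from its `execute` method.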

Cross-account permissions on AWS: Simplifying EMR workflows

AppsFlyer operates across multiple AWS accounts, creating a need for secure and efficient cross-account access. EMR Serverless jobs are executed in the production account, and the data they process resides in a separate data account. To enable seamless operation, Assume Role permissions are used so that EMR Serverless jobs running in the production account can access the data and services in the data account.

The following diagram shows the cross-account permissions AppsFlyer adopted:

Role management strategy

To manage cross-account access efficiently, three distinct roles were created and maintained:

EMR role – Used for executing and managing EMR Serverless applications in the production account. Integrated directly into Airflow workers to make it available for the DAGs on the dedicated team Airflow cluster.

Execution role – Assigned to the Spark job running on EMR Serverless. Passed by the EMR role in the DAG code to provide seamless integration.

Data role – Resides in the data account and is assumed by the execution role to access data stored in Amazon S3 and other AWS services.

To enforce access boundaries, each role and policy is tagged with team-specific identifiers.

This makes sure that teams can only access their own data and roles, minimizing unauthorized access to other teams’ resources.

Simplifying Airflow migration

A streamlined process was developed to make cross-account permissions transparent for teams migrating their workloads to EMR Serverless:

The EMR role is embedded into Airflow workers, making it available for DAGs in the dedicated Airflow cluster for each team:

    {
        "Version": "2012-10-17",
        "Statement": [
            ...
            {
                "Effect": "Allow",
                "Action": "iam:PassRole",
                "Resource": "arn:aws:iam::account-id:role/execution-role",
                "Condition": {
                    "StringEquals": {
                        "iam:ResourceTag/Team": "team-tag"
                    }
                }
            }
        ]
    }

The EMR role automatically passes the execution role to the job within the DAG code:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": "arn:aws:iam::data-account-id:role/data-role",
                "Condition": {
                    "StringEquals": {
                        "iam:ResourceTag/Team": "team-tag"
                    }
                }
            }
        ]
    }

The execution role assumes the data role dynamically during job execution to access the required data and services in the data account:

The following policy allows the execution role in the production account to assume the data role.

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "AWS": "arn:aws:iam::production-account-id:role/execution-role"
                },
                "Action": "sts:AssumeRole"
            }
        ]
    }
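At run time, the role chain above comes down to a single AWS Security Token Service (STS) call made with the execution role’s identity. The following sketch shows only that call, assuming `sts_client` behaves like `boto3.client("sts")`; the function name and default session name are hypothetical, and a Spark job would more likely delegate this exchange to its S3 credentials provider than to application code.

```python
def assume_data_role(sts_client, data_role_arn, session_name="emr-serverless-job"):
    """Exchange the caller's identity for temporary data-role credentials."""
    response = sts_client.assume_role(
        RoleArn=data_role_arn,
        RoleSessionName=session_name,
    )
    credentials = response["Credentials"]
    # The keys below match the keyword arguments accepted by boto3.client().
    return {
        "aws_access_key_id": credentials["AccessKeyId"],
        "aws_secret_access_key": credentials["SecretAccessKey"],
        "aws_session_token": credentials["SessionToken"],
    }
```

The returned dictionary can be unpacked into a client constructor, for example `boto3.client("s3", **assume_data_role(boto3.client("sts"), data_role_arn))`.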

Policies, trust relationships, and role definitions are managed in a dedicated GitLab repository. GitLab CI/CD pipelines automate the creation and integration of roles and policies, providing consistency and reducing manual overhead.

Benefits of AppsFlyer’s approach

This approach provided the following benefits:

Seamless access – Teams no longer need to handle cross-account permissions manually because these are automated through preconfigured roles and policies, providing seamless and secure access to resources across accounts.

Scalable and secure – Role-based and tag-based permissions provide security and scalability across multiple teams and accounts. Using roles and tags alleviates the need to create separate hardcoded policies for each team or account. Instead, they can define generalized policies that scale automatically as new resources, accounts, or teams are added.

Automated management – GitLab CI/CD streamlines the deployment and integration of policies and roles, reducing manual effort while improving consistency. It also minimizes human error, improves change transparency, and simplifies version management.

Flexibility for teams – Teams have the flexibility to use their own or native EMR Serverless operators while maintaining secure access to data.
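One way to picture the tag-based approach is a single policy template rendered per team instead of a hand-written policy per team. The following sketch is an assumption about how such a template could look in Python; the function name and the example values are hypothetical, and only the policy shape follows the examples shown earlier.

```python
import json


def pass_role_policy(account_id: str, execution_role_name: str, team_tag: str) -> dict:
    """Render an iam:PassRole policy scoped to roles tagged with one team."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "iam:PassRole",
                "Resource": f"arn:aws:iam::{account_id}:role/{execution_role_name}",
                "Condition": {
                    "StringEquals": {"iam:ResourceTag/Team": team_tag}
                },
            }
        ],
    }


# Example values only: the account ID, role name, and tag are placeholders.
print(json.dumps(pass_role_policy("111111111111", "execution-role", "team-tag"), indent=4))
```

Adding a team then means adding one entry to the pipeline’s input rather than authoring a new policy document.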

By implementing a robust, automated cross-account permissions system, AppsFlyer has enabled secure and efficient access to data and services across multiple AWS accounts. This makes sure that teams can focus on their workloads without worrying about infrastructure complexities, accelerating their migration to EMR Serverless.

Integrating lineage into EMR Serverless

AppsFlyer developed a robust solution for column-level lineage collection to provide comprehensive visibility into data transformations across pipelines. Lineage data is stored in Amazon S3 and subsequently ingested into DataHub, AppsFlyer’s lineage and metadata management environment.

Currently, AppsFlyer collects column-level lineage from a variety of sources, including Amazon Athena, BigQuery, Spark, and more.

This section focuses on how AppsFlyer collects Spark column-level lineage specifically within the EMR Serverless infrastructure.

Collecting Spark lineage with Spline

To capture lineage from Spark jobs, AppsFlyer uses Spline, an open source tool designed for automated tracking of data lineage and pipeline structures.

AppsFlyer modified Spline’s default behavior to output a customized Spline object that aligns with AppsFlyer’s specific requirements. AppsFlyer adapted the Spline integration to both legacy and modern environments. In the pre-migration phase, they injected the Spline agent into Spark jobs through their customized Airflow Spark operator. In the post-migration phase, they integrated Spline directly into EMR Serverless applications.

The lineage workflow consists of the following steps:

As Spark jobs execute, Spline captures detailed metadata about the queries and transformations performed.

The captured metadata is exported as Spline object files to a dedicated S3 bucket.

These Spline objects are processed into column-level lineage objects customized to fit AppsFlyer’s data architecture and requirements.

The processed lineage data is ingested into DataHub, providing a centralized and interactive view of data dependencies.

The following figure is an example of a lineage diagram from DataHub.

Challenges and how AppsFlyer addressed them

AppsFlyer encountered the following challenges:

Supporting different EMR Serverless applications – Each EMR Serverless application has its own Spark and Scala version requirements.

Varied operator usage – Teams often use custom or native EMR Serverless operators, making uniform Spline integration challenging.

Confirming universal adoption – They need to make sure Spark jobs across multiple accounts use the Spline agent for lineage tracking.

AppsFlyer addressed these challenges with the following solutions:

Version-specific Spline agents – AppsFlyer created a dedicated Spline agent for each EMR Serverless application version to match its Spark and Scala versions. For example, EMR Serverless application version 7.0.1 and Spline.7.0.1.

Spark defaults integration – They integrated the Spline agent into the EMR Serverless application Spark defaults so that lineage is collected for jobs executed on the application, with no job-specific modifications needed.

Automation for compliance – This process consists of the following steps:

Detect a newly created EMR Serverless application across accounts.

Verify that Spline is properly defined in the application’s Spark defaults.

Send a PagerDuty alert to the responsible team if misconfigurations are detected.
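The verification step of this compliance automation can be sketched as a pure function over application descriptions. The sketch assumes each description is shaped like the `application` object returned by boto3’s EMR Serverless `get_application` call, with a `runtimeConfiguration` list of classifications, and that Spline is enabled through Spark’s `spark.sql.queryExecutionListeners` property. The function names are hypothetical, and discovery and PagerDuty alerting are out of scope.

```python
# Listener class registered by the open source Spline Spark agent.
SPLINE_LISTENER = "za.co.absa.spline.harvester.listener.SplineQueryExecutionListener"


def spark_defaults(application: dict) -> dict:
    """Return the spark-defaults properties of an application, or {} if unset."""
    for config in application.get("runtimeConfiguration", []):
        if config.get("classification") == "spark-defaults":
            return config.get("properties", {})
    return {}


def has_spline(application: dict) -> bool:
    """Check whether the Spline listener is registered in spark-defaults."""
    listeners = spark_defaults(application).get("spark.sql.queryExecutionListeners", "")
    return SPLINE_LISTENER in [name.strip() for name in listeners.split(",")]


def misconfigured(applications: list[dict]) -> list[str]:
    """Return the IDs of applications that would need an alert."""
    return [app["applicationId"] for app in applications if not has_spline(app)]
```

A scheduled job could feed `misconfigured` with the applications found in each account and page the owning team for every ID it returns.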

Instance integration with Terraform

To automate Spline integration, AppsFlyer used Terraform and local-exec to define Spark defaults for EMR Serverless applications. With Amazon EMR, you can set unified Spark configuration properties through spark-defaults, which are then applied to Spark jobs.

This configuration makes sure the Spline agent is automatically applied to every Spark job without requiring modifications to the Airflow operator or the job itself.

This robust lineage integration provides the following benefits:

Full visibility – Automatic lineage tracking provides detailed insights into data transformations

Seamless scalability – Version-specific Spline agents provide compatibility with EMR Serverless applications

Proactive monitoring – Automated compliance checks verify that lineage tracking is consistently enabled across accounts

Enhanced governance – Ingesting lineage data into DataHub provides traceability, supports audits, and fosters a deeper understanding of data dependencies

By integrating Spline with EMR Serverless applications, AppsFlyer has provided comprehensive and automated lineage tracking, so teams can understand their data pipelines better while meeting compliance requirements. This scalable approach aligns with AppsFlyer’s commitment to maintaining transparency and reliability throughout their data landscape.

Monitoring and observability

When embarking on a large migration, and as a day-to-day best practice, monitoring and observability are key to running workloads successfully in terms of stability, debugging, and cost.

AppsFlyer’s DataInfra team set several KPIs for monitoring and observability in EMR Serverless:

Monitor infrastructure-level metrics and logs:

EMR Serverless resource usage, including cost

EMR Serverless API usage

Monitor Spark application-level metrics and logs:

stdout and stderr logs

Spark engine metrics

Centralized observability over the existing environment, Datadog

Metrics

Using EMR Serverless native metrics, AppsFlyer’s DataInfra team set up several dashboards to support monitoring both the migration and the day-to-day usage of EMR Serverless across the company. The following are the main metrics that were monitored:

Service quota usage metrics:

vCPU usage tracking (ResourceCount with vCPU dimension)

API usage tracking (API actual usage vs. API limits)

Application status metrics:

RunningJobs, SuccessJobs, FailedJobs, PendingJobs, CancelledJobs

Resource limits tracking:

MaxCPUAllowed vs. CPUAllocated

MaxMemoryAllowed vs. MemoryAllocated

MaxStorageAllowed vs. StorageAllocated

Worker-level metrics:

WorkerCpuAllocated vs. WorkerCpuUsed

WorkerMemoryAllocated vs. WorkerMemoryUsed

WorkerEphemeralStorageAllocated vs. WorkerEphemeralStorageUsed

Capacity allocation tracking:

Metrics filtered by CapacityAllocationType (PreInitCapacity vs. OnDemandCapacity)

ResourceCount

Worker type distribution:

Metrics filtered by WorkerType (SPARK_DRIVER vs. SPARK_EXECUTORS)

Job success rates over time:

SuccessJobs vs. FailedJobs ratio

SubmittedJobs vs. PendingJobs

The following screenshot shows an example of the tracked metrics.
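As an illustration of the job success rate item, the following sketch computes the SuccessJobs vs. FailedJobs ratio for one application from Amazon CloudWatch. It assumes `cloudwatch` behaves like `boto3.client("cloudwatch")`, and that the metrics are published under the `AWS/EMRServerless` namespace with an `ApplicationId` dimension; both names should be confirmed in the CloudWatch console. The function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def metric_sum(cloudwatch, metric_name, application_id, hours=24):
    """Sum one job-count metric for an application over the last `hours` hours."""
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/EMRServerless",  # assumed namespace
        MetricName=metric_name,
        Dimensions=[{"Name": "ApplicationId", "Value": application_id}],  # assumed dimension
        StartTime=end - timedelta(hours=hours),
        EndTime=end,
        Period=3600,  # one datapoint per hour
        Statistics=["Sum"],
    )
    return sum(point["Sum"] for point in response["Datapoints"])


def job_success_rate(cloudwatch, application_id, hours=24):
    """Return succeeded / (succeeded + failed), or None if no job finished."""
    succeeded = metric_sum(cloudwatch, "SuccessJobs", application_id, hours)
    failed = metric_sum(cloudwatch, "FailedJobs", application_id, hours)
    finished = succeeded + failed
    return None if finished == 0 else succeeded / finished
```

The same helper can be pointed at other counters from the list above, such as PendingJobs, to track queueing during the migration.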

Logs

For logs management, AppsFlyer’s DataInfra team explored several options:

Streamlining EMR Serverless log shipping to Datadog

Because AppsFlyer decided to keep their logs in an external logging environment, the DataInfra team aimed to reduce the number of components involved in the shipping process and lower maintenance overhead. Instead of managing a Lambda-based log shipper, they developed a custom Spark plugin that seamlessly exports logs from EMR Serverless to Datadog.

Companies already storing logs in Amazon S3 or CloudWatch Logs can take advantage of EMR Serverless native support for these destinations. However, for teams needing a direct, real-time integration with Datadog, this approach alleviates the need for extra infrastructure, providing a more efficient and maintainable logging solution.

The custom Spark plugin offers the following capabilities:

Automated log export – Streams logs from EMR Serverless to Datadog

Fewer additional components – Removes the need for Lambda-based log shippers

Secure API key management – Uses Vault instead of hardcoding credentials

Customizable logging – Supports custom Log4j settings and log levels

Full integration with Spark – Works on both driver and executor nodes

How the plugin works

In this section, we walk through the components of the plugin and provide a pseudocode overview:

Driver plugin – LoggerDriverPlugin runs on the Spark driver to configure logging. The plugin fetches EMR job metadata, calls Vault to retrieve the Datadog API key, and configures logging settings.

initialize() {
    if (user provided log4j.xml) {
        Use custom log configuration
    } else {
        Fetch EMR job metadata (application name, job ID, tags)
        Retrieve Datadog API key from Vault
        Apply default logging settings
    }
}

Executor plugin – LoggerExecutorPlugin provides consistent logging across executor nodes. It inherits the driver’s log configuration so that every executor logs with the same settings.

initialize() {
    fetch logging config from Driver
    apply log settings (log4j, log levels)
}

Main plugin – LoggerSparkPlugin registers the driver and executor plugins in Spark. It serves as the entry point for Spark and applies custom logging settings dynamically.

function registerPlugin() {
    return (driverPlugin, executorPlugin);
}

loginToVault(role, vaultAddress) {
    create AWS signed request
    authenticate with Vault
    return vault token
}

getDatadogApiKey(vaultToken, secretPath) {
    fetch API key from Vault
    return key
}
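To make the control flow in the pseudocode above concrete, the following is a minimal Python sketch of the driver and executor initialization steps. It is not the plugin's actual code, which runs inside Spark on the JVM. Every name here (driver_init, executor_init, the config keys, and the default INFO level) is a hypothetical stand-in, and the Vault calls are injected as plain functions so the example runs without a Vault server.

```python
from typing import Callable, Dict, Optional

# Hypothetical stand-ins for loginToVault and getDatadogApiKey.
VaultLogin = Callable[[str, str], str]    # (role, vault_address) -> token
SecretReader = Callable[[str, str], str]  # (token, secret_path) -> API key


def driver_init(
    custom_log4j: Optional[str],
    job_metadata: Dict[str, str],
    vault_role: str,
    vault_address: str,
    secret_path: str,
    login: VaultLogin,
    read_secret: SecretReader,
) -> Dict[str, str]:
    """Build the logging config on the driver, following the pseudocode branches."""
    if custom_log4j is not None:
        # The user supplied a log4j.xml, so use it and skip the defaults.
        return {"log4j.location": custom_log4j}
    # Default path: authenticate with Vault, fetch the key, tag logs with job metadata.
    token = login(vault_role, vault_address)
    config = {
        "datadog.apiKey": read_secret(token, secret_path),
        "log.level": "INFO",  # assumed default, not taken from the source
    }
    config.update({f"tag.{key}": value for key, value in job_metadata.items()})
    return config


def executor_init(driver_config: Dict[str, str]) -> Dict[str, str]:
    """Executors copy the driver's config so both sides log consistently."""
    return dict(driver_config)
```

In Spark's plugin API, the map returned by the driver plugin's init method is passed to each executor plugin's init method, which is presumably the mechanism behind "fetch logging config from Driver"; the sketch models that hand-off with a plain dictionary copy.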

Set up the plugin

To set up the plugin, complete the following steps:

Add the following dependency to your project:

com.AppsFlyer.datacom

emr-serverless-logger-plugin

Configure the Spark plugin. The following code enables the custom Spark plugin and assigns the Vault role used to access the Datadog API key:

--conf "spark.plugins=com.AppsFlyer.datacom.emr.plugin.LoggerSparkPlugin"

--conf "spark.datacom.emr.plugin.vaultAuthRole=your_vault_role"

Use a custom or default Log4j configuration:

--conf "spark.datacom.emr.plugin.location=classpath:my_custom_log4j.xml"

Set the environment variables for the different log levels. This adjusts the logging for specific packages.

--conf "spark.emr-serverless.driverEnv.ROOT_LOG_LEVEL=WARN"

--conf "spark.executorEnv.ROOT_LOG_LEVEL=WARN"

--conf "spark.emr-serverless.driverEnv.LOG_LEVEL=DEBUG"

--conf "spark.executorEnv.LOG_LEVEL=DEBUG"

Configure Vault and the Datadog API key, and verify that the Datadog API key is retrieved securely.
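The --conf flags from the preceding steps are typically passed together as one parameter string. The following is a minimal sketch of a helper that assembles them; the function name and its defaults are assumptions made for illustration, while the configuration keys are copied from the steps above.

```python
from typing import Dict, Optional


def build_spark_submit_conf(
    vault_role: str,
    log4j_location: Optional[str] = None,
    root_log_level: str = "WARN",
    log_level: str = "DEBUG",
) -> str:
    """Join the plugin settings into a single string of --conf flags."""
    conf: Dict[str, str] = {
        "spark.plugins": "com.AppsFlyer.datacom.emr.plugin.LoggerSparkPlugin",
        "spark.datacom.emr.plugin.vaultAuthRole": vault_role,
        "spark.emr-serverless.driverEnv.ROOT_LOG_LEVEL": root_log_level,
        "spark.executorEnv.ROOT_LOG_LEVEL": root_log_level,
        "spark.emr-serverless.driverEnv.LOG_LEVEL": log_level,
        "spark.executorEnv.LOG_LEVEL": log_level,
    }
    if log4j_location is not None:
        # Only set when a custom Log4j file should replace the default one.
        conf["spark.datacom.emr.plugin.location"] = log4j_location
    return " ".join(f"--conf {key}={value}" for key, value in conf.items())
```

For example, build_spark_submit_conf("your_vault_role") yields six --conf flags in one string, which could then be supplied as the sparkSubmitParameters value when starting an EMR Serverless job run.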

By adopting this plugin, AppsFlyer was able to significantly simplify log shipping, reducing the number of moving parts while maintaining real-time log visibility in Datadog. This approach provides reliability, security, and ease of maintenance, making it a strong fit for teams using EMR Serverless with Datadog.

Summary

Through their migration to EMR Serverless, AppsFlyer achieved a significant transformation in team autonomy and operational efficiency. Individual teams now have greater freedom to choose and build their own resources without depending on a central infrastructure team, and can work more independently and innovatively. The minimization of Spot interruptions, which were common in their previous self-managed Hadoop clusters, has substantially improved the stability and agility of their operations. Thanks to this autonomy and reliability, combined with the automatic scaling capabilities of EMR Serverless, the AppsFlyer teams can focus more on data processing and innovation rather than infrastructure management. The result is a more efficient, flexible, and self-sufficient development environment where teams can better respond to their specific needs while maintaining high performance standards.

Ruli Weisbach, AppsFlyer EVP of R&D, says,

“EMR-Serverless is a game changer for AppsFlyer; we’re able to save significantly on our cost with remarkably lower management overhead and maximal elasticity.”

If the AppsFlyer approach sparked your interest and you are thinking about implementing a similar solution in your organization, refer to the following resources:

Migrating to EMR Serverless can transform your organization’s data processing capabilities, offering a fully managed, cloud-based experience that automatically scales resources and eases the operational complexity of traditional cluster management, while enabling advanced analytics and machine learning workloads with greater cost-efficiency.

About the authors

Roy Ninio is an AI Platform Lead with deep expertise in scalable data platforms and cloud-native architectures. At AppsFlyer, Roy led the design of a high-performance data lake handling PB of daily events, drove the adoption of EMR Serverless for dynamic big data processing, and architected lineage and governance systems across platforms.

Avichay Marciano is a Sr. Analytics Solutions Architect at Amazon Web Services. He has over a decade of experience in building large-scale data platforms using Apache Spark, modern data lake architectures, and OpenSearch. He is passionate about data-intensive systems, analytics at scale, and its intersection with machine learning.

Eitav Arditti is an AWS Senior Solutions Architect with 15 years in the AdTech industry, specializing in serverless, containers, platform engineering, and edge technologies. He designs cost-efficient, large-scale AWS architectures that leverage cloud-native and edge computing to deliver scalable, reliable solutions for business growth.

Yonatan Dolan is a Principal Analytics Specialist at Amazon Web Services. Yonatan is an Apache Iceberg evangelist, helping customers design scalable, open data lakehouse architectures and adopt modern analytics solutions across industries.
