In an era where data drives innovation and decision-making, organizations are increasingly focused not only on accumulating data but also on maintaining its quality and reliability. High-quality data is essential for building trust in analytics, improving the performance of machine learning (ML) models, and supporting strategic business initiatives.

With AWS Glue Data Quality, you can measure and monitor the quality of your data. It analyzes your data, recommends data quality rules, evaluates data quality, and provides a score that quantifies the quality of your data, so you can make confident business decisions. With this launch, AWS Glue Data Quality is now integrated with the lakehouse architecture of Amazon SageMaker, Apache Iceberg on general purpose Amazon Simple Storage Service (Amazon S3) buckets, and Amazon S3 Tables. This integration brings together serverless data integration, quality management, and advanced ML capabilities in a unified environment.

This post explores how you can use AWS Glue Data Quality to maintain the data quality of S3 Tables and Apache Iceberg tables on general purpose S3 buckets. We discuss strategies for verifying the quality of published data and how these integrated technologies can be used to implement effective data quality workflows.

Solution overview

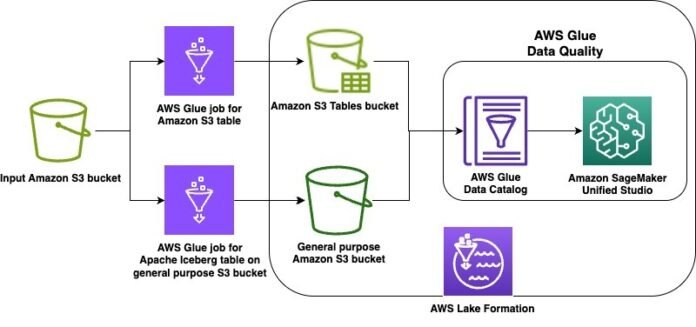

In this launch, we support the lakehouse architecture of Amazon SageMaker, Apache Iceberg on general purpose S3 buckets, and Amazon S3 Tables. As example use cases, we demonstrate data quality on an Apache Iceberg table stored in a general purpose S3 bucket as well as on Amazon S3 Tables. The steps cover the following:

Create an Apache Iceberg table on a general purpose Amazon S3 bucket and an Amazon S3 table in a table bucket using two AWS Glue extract, transform, and load (ETL) jobs

Grant appropriate AWS Lake Formation permissions on each table

Run data quality recommendations at rest on the Apache Iceberg table on the general purpose S3 bucket

Run the data quality rules and visualize the results in Amazon SageMaker Unified Studio

Run data quality recommendations at rest on the S3 table

Run the data quality rules and visualize the results in SageMaker Unified Studio

The diagram at the beginning of this section shows the solution architecture.

Prerequisites

To follow the instructions, you must have the following prerequisites:

Create S3 tables and Apache Iceberg on general purpose S3 bucket

First, complete the following steps to upload data and scripts:

Upload the attached AWS Glue job scripts to your designated script bucket in S3

create_iceberg_table_on_s3.py

create_s3_table_on_s3_bucket.py

To download the New York City Taxi – Yellow Trip Data dataset for January 2025 (Parquet file), navigate to NYC TLC Trip Record Data, expand 2025, and choose Yellow Taxi Trip records under the January section. A file called yellow_tripdata_2025-01.parquet will be downloaded to your computer.

On the Amazon S3 console, open an input bucket of your choice and create a folder called nyc_yellow_trip_data. The stack will create a GlueJobRole with permissions to this bucket.

Upload the yellow_tripdata_2025-01.parquet file to the folder.

Download the CloudFormation stack file. Navigate to the CloudFormation console. Choose Create stack. Choose Upload a template file and select the CloudFormation template you downloaded. Choose Next.

Enter a unique name for Stack name.

Configure the stack parameters. Default values are provided in the following table:

| Parameter | Default value | Description |
| --- | --- | --- |
| ScriptBucketName | N/A – user-supplied | Name of the referenced Amazon S3 general purpose bucket containing the AWS Glue job scripts |
| DatabaseName | iceberg_dq_demo | Name of the AWS Glue database to be created for the Apache Iceberg table on the general purpose Amazon S3 bucket |
| GlueIcebergJobName | create_iceberg_table_on_s3 | Name of the created AWS Glue job that creates the Apache Iceberg table on the general purpose Amazon S3 bucket |
| GlueS3TableJobName | create_s3_table_on_s3_bucket | Name of the created AWS Glue job that creates the Amazon S3 table |
| S3TableBucketName | dataquality-demo-bucket | Name of the Amazon S3 table bucket to be created |
| S3TableNamespaceName | s3_table_dq_demo | Name of the Amazon S3 table bucket namespace to be created |
| S3TableTableName | ny_taxi | Name of the Amazon S3 table to be created by the AWS Glue job |
| IcebergTableName | ny_taxi | Name of the Apache Iceberg table on general purpose Amazon S3 to be created by the AWS Glue job |
| IcebergScriptPath | scripts/create_iceberg_table_on_s3.py | The referenced Amazon S3 path to the AWS Glue script file for the Apache Iceberg table creation job. Verify the file name matches the corresponding GlueIcebergJobName |
| S3TableScriptPath | scripts/create_s3_table_on_s3_bucket.py | The referenced Amazon S3 path to the AWS Glue script file for the Amazon S3 table creation job. Verify the file name matches the corresponding GlueS3TableJobName |
| InputS3Bucket | N/A – user-supplied bucket | Name of the referenced Amazon S3 bucket to which the NY Taxi data was uploaded |
| InputS3Path | nyc_yellow_trip_data | The referenced Amazon S3 path to which the NY Taxi data was uploaded |
| OutputBucketName | N/A – user-supplied | Name of the created Amazon S3 general purpose bucket for the AWS Glue job for Apache Iceberg table data |
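If you would rather script the stack creation than fill in the console form, the parameters in the table above can be kept as a plain mapping and converted into the ParameterKey/ParameterValue list that the CloudFormation APIs accept. The following is a minimal sketch, not part of the original walkthrough: the helper name build_stack_parameters and the bucket names in the usage note are hypothetical, and the defaults are copied from the table.

```python
# Defaults copied from the parameter table. Values the table marks as
# user-supplied are left as None and must be provided by the caller.
DEFAULTS = {
    "ScriptBucketName": None,
    "DatabaseName": "iceberg_dq_demo",
    "GlueIcebergJobName": "create_iceberg_table_on_s3",
    "GlueS3TableJobName": "create_s3_table_on_s3_bucket",
    "S3TableBucketName": "dataquality-demo-bucket",
    "S3TableNamespaceName": "s3_table_dq_demo",
    "S3TableTableName": "ny_taxi",
    "IcebergTableName": "ny_taxi",
    "IcebergScriptPath": "scripts/create_iceberg_table_on_s3.py",
    "S3TableScriptPath": "scripts/create_s3_table_on_s3_bucket.py",
    "InputS3Bucket": None,
    "InputS3Path": "nyc_yellow_trip_data",
    "OutputBucketName": None,
}


def build_stack_parameters(overrides):
    """Merge overrides into the defaults and return a CloudFormation-style list."""
    unknown = sorted(set(overrides) - set(DEFAULTS))
    if unknown:
        raise ValueError(f"Unknown parameters: {unknown}")
    merged = {**DEFAULTS, **overrides}
    missing = sorted(key for key, value in merged.items() if value is None)
    if missing:
        raise ValueError(f"Missing user-supplied parameters: {missing}")
    return [
        {"ParameterKey": key, "ParameterValue": value}
        for key, value in merged.items()
    ]
```

For example, build_stack_parameters({"ScriptBucketName": "my-script-bucket", "InputS3Bucket": "my-input-bucket", "OutputBucketName": "my-output-bucket"}) returns 13 entries that could be passed as the Parameters argument of a CloudFormation create-stack call. The bucket names here are placeholders.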

Complete the following steps to configure AWS Identity and Access Management (IAM) and Lake Formation permissions:

If you haven't previously worked with S3 Tables and analytics services, navigate to Amazon S3.

Choose Table buckets.

Choose Enable integration to enable analytics service integrations with your S3 table buckets.

Navigate to the Resources tab in your AWS CloudFormation stack. Note the IAM role with the logical ID GlueJobRole and the database name with the logical ID GlueDatabase. Additionally, note the name of the S3 table bucket with the logical ID S3TableBucket as well as the namespace name with the logical ID S3TableBucketNamespace. The S3 table bucket name is the portion of the Amazon Resource Name (ARN) which follows: arn:aws:s3tables:::bucket/{S3 Table bucket Name}. The namespace name is the portion of the namespace ARN which follows: arn:aws:s3tables:::bucket/{S3 Table bucket Name}|{namespace name}.

Navigate to the Lake Formation console as a Lake Formation data lake administrator.

Navigate to the Databases tab and select your GlueDatabase. Note that the selected default catalog should match your AWS account ID.

Select the Actions dropdown menu and under Permissions, choose Grant.

Grant your GlueJobRole from step 4 the necessary permissions. Under Database permissions, select Create table and Describe, as shown in the following screenshot.

Navigate back to the Databases tab in Lake Formation and select the catalog that matches the value of S3TableBucket you noted in step 4 in the format: :s3tablescatalog/

Select your namespace name. From the Actions dropdown menu, under Permissions, choose Grant.

Grant your GlueJobRole from step 4 the necessary permissions. Under Database permissions, select Create table and Describe, as shown in the following screenshot.
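The ARN shapes described in the steps above can be picked apart programmatically, which helps when you need the table bucket name and namespace for later commands. The sketch below is illustrative only: the function names are hypothetical, and the catalog ID layout (account ID, then ":s3tablescatalog/", then the table bucket name) is an assumption inferred from the ":s3tablescatalog/" format shown in the steps and the note that the default catalog matches your AWS account ID.

```python
import re

# Matches both the table bucket ARN and the namespace form shown in the steps:
#   arn:aws:s3tables:<region>:<account>:bucket/<bucket name>
#   arn:aws:s3tables:<region>:<account>:bucket/<bucket name>|<namespace name>
_S3TABLES_ARN = re.compile(
    r"^arn:(?P<partition>[^:]+):s3tables:(?P<region>[^:]*):(?P<account>[^:]*)"
    r":bucket/(?P<bucket>[^/|]+)(?:\|(?P<namespace>.+))?$"
)


def parse_s3tables_arn(arn):
    """Return the ARN components as a dict; 'namespace' is None if absent."""
    match = _S3TABLES_ARN.match(arn)
    if match is None:
        raise ValueError(f"Not an S3 Tables bucket ARN: {arn!r}")
    return match.groupdict()


def s3tables_catalog_id(account_id, bucket_name):
    """Build the catalog ID string (assumed layout, see the lead-in)."""
    return f"{account_id}:s3tablescatalog/{bucket_name}"
```

With a made-up account ID of 111122223333 and the default bucket name from the parameter table, s3tables_catalog_id("111122223333", "dataquality-demo-bucket") yields a string in the shape that the later JSON input files expect for CatalogId.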

To run the jobs created in the CloudFormation stack to create the sample tables and configure Lake Formation permissions for the DataQualityRole, complete the following steps:

In the Resources tab of your CloudFormation stack, note the AWS Glue job names for the logical resource IDs: GlueS3TableJob and GlueIcebergJob.

Navigate to the AWS Glue console and select ETL jobs. Select your GlueIcebergJob from step 11 and choose Run job. Select your GlueS3TableJob and choose Run job.

To verify the successful creation of your Apache Iceberg table on the general purpose S3 bucket in the database, navigate to Lake Formation with your Lake Formation data lake administrator permissions. Under Databases, select your GlueDatabase. The selected default catalog should match your AWS account ID.

On the dropdown menu, choose View and then Tables. You should see a new tab with the table name you specified for IcebergTableName. You have verified the table creation.

Select this table and grant your DataQualityRole (-DataQualityRole-) the necessary Lake Formation permissions by choosing the Grant link in the Actions tab. Choose Select and Describe from Table permissions for the new Apache Iceberg table.

To verify the S3 table in the S3 table bucket, navigate to Databases in the Lake Formation console with your Lake Formation data lake administrator permissions. Make sure the selected catalog is your S3 table bucket catalog: :s3tablescatalog/

Select your S3 table namespace and choose the dropdown menu View.

Choose Tables and you should see a new tab with the table name you specified for S3TableTableName. You have verified the table creation.

Choose the link for the table and under Actions, choose Grant. Grant your DataQualityRole the necessary Lake Formation permissions. Choose Select and Describe from Table permissions for the S3 table.

In the Lake Formation console with your Lake Formation data lake administrator permissions, on the Administration tab, choose Data lake locations.

Choose Register location. Enter your OutputBucketName as the Amazon S3 path. Enter the LakeFormationRole from the stack resources as the IAM role. Under Permission mode, choose Lake Formation.

On the Lake Formation console under Application integration settings, select Allow external engines to access data in Amazon S3 locations with full table access, as shown in the following screenshot.
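The console grants described in this walkthrough (Create table and Describe on the databases for the AWS Glue job role, Select and Describe on the tables for the data quality role) could also be expressed as request payloads for the Lake Formation GrantPermissions API. This is a hedged sketch rather than the post's own method: the helper names are hypothetical, the client is passed in by the caller (for example, a boto3 Lake Formation client), and every ARN, catalog ID, and name in the usage note is a placeholder.

```python
def database_grant(role_arn, catalog_id, database_name):
    """Payload granting Create table and Describe on a database."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": role_arn},
        "Resource": {"Database": {"CatalogId": catalog_id, "Name": database_name}},
        "Permissions": ["CREATE_TABLE", "DESCRIBE"],
    }


def table_grant(role_arn, catalog_id, database_name, table_name):
    """Payload granting Select and Describe on a single table."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": role_arn},
        "Resource": {
            "Table": {
                "CatalogId": catalog_id,
                "DatabaseName": database_name,
                "Name": table_name,
            }
        },
        "Permissions": ["SELECT", "DESCRIBE"],
    }


def apply_grants(lakeformation_client, grants):
    """Send each payload through the client's grant_permissions call."""
    for grant in grants:
        lakeformation_client.grant_permissions(**grant)
    return len(grants)
```

A caller might build table_grant(data_quality_role_arn, account_id, "iceberg_dq_demo", "ny_taxi") for the Iceberg table and a second payload with the S3 table bucket catalog ID for the S3 table, then pass both to apply_grants. Keeping the payload builders separate from the API call makes the grants easy to review before anything is changed.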

Generate recommendations for Apache Iceberg table on general purpose S3 bucket managed by Lake Formation

In this section, we show how to generate data quality rules using the data quality rule recommendations feature of AWS Glue Data Quality on your Apache Iceberg table on a general purpose S3 bucket. Follow these steps:

Navigate to the AWS Glue console. Under Data Catalog, choose Databases. Choose the GlueDatabase.

Under Tables, select your IcebergTableName. On the Data quality tab, choose Run history.

Under Recommendation runs, choose Recommend rules.

Use the DataQualityRole (-DataQualityRole-) to generate data quality rule recommendations, leaving the other settings as default. The results are shown in the following screenshot.

Run data quality rules for Apache Iceberg table on general purpose S3 bucket managed by Lake Formation

In this section, we show how to create a data quality ruleset with the recommended rules. After creating the ruleset, we run the data quality rules. Follow these steps:

Copy the resulting rules from your recommendation run by selecting the dq-run ID and choosing Copy.

Navigate back to the table under the Data quality tab and choose Create data quality rules. Paste the ruleset from step 1 here. Choose Save ruleset, as shown in the following screenshot.

After saving your ruleset, navigate back to the Data Quality tab for your Apache Iceberg table on the general purpose S3 bucket. Select the ruleset you created. To start the data quality evaluation run on the ruleset using your data quality role, choose Run, as shown in the following screenshot.
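The ruleset you copy and paste in the steps above is written in Data Quality Definition Language (DQDL), which wraps a comma-separated list of rules in a Rules = [ ... ] block. To give a feel for the shape, here is a small sketch that assembles such a block from individual rule strings. The helper name is hypothetical, and the rules in the usage note are illustrative guesses based on common columns of the NYC yellow taxi dataset; the rules that your recommendation run produces will differ.

```python
def build_dqdl_ruleset(rules):
    """Join individual DQDL rule strings into a single Rules = [ ... ] block."""
    cleaned = [rule.strip() for rule in rules if rule.strip()]
    if not cleaned:
        raise ValueError("A ruleset needs at least one rule")
    body = ",\n".join(f"    {rule}" for rule in cleaned)
    return f"Rules = [\n{body}\n]"
```

For instance, build_dqdl_ruleset(['IsComplete "VendorID"', 'ColumnValues "fare_amount" >= 0']) produces a two-rule block that could be pasted into the Create data quality rules editor. Editing the recommended rules as a list of strings like this makes it easier to drop or tighten individual checks before saving the ruleset.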

Generate recommendations for the S3 table on the S3 table bucket

In this section, we show how to use the AWS Command Line Interface (AWS CLI) to generate recommendations for your S3 table on the S3 table bucket. This will also create a data quality ruleset for the S3 table. Follow these steps:

Fill in your S3 table namespace name, S3 table name, Catalog ID, and Data Quality role ARN in the following JSON file and save it locally:

    {
        "DataSource": {
            "GlueTable": {
                "DatabaseName": "",
                "TableName": "",
                "CatalogId": ":s3tablescatalog/"
            }
        },
        "Role": "",
        "NumberOfWorkers": 5,
        "Timeout": 120,
        "CreatedRulesetName": "data_quality_s3_table_demo_ruleset"
    }

Enter the following AWS CLI command, replacing the local file name and Region with your own information:

    aws glue start-data-quality-rule-recommendation-run --cli-input-json file:// --region

Run the following AWS CLI command to confirm that the recommendation run succeeds:
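One way to script this confirmation, sketched here in Python rather than with the AWS CLI, is to poll the recommendation run until it reaches a terminal status. This is an assumption-laden illustration, not the command from the original post: the function name and polling defaults are hypothetical, and the client is expected to be something like a boto3 Glue client, whose get_data_quality_rule_recommendation_run call returns a Status field.

```python
import time

# Statuses after which the run will not change any further.
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT"}


def wait_for_recommendation_run(glue_client, run_id, poll_seconds=30,
                                max_polls=60, sleep=time.sleep):
    """Poll a recommendation run and return the final response.

    glue_client is supplied by the caller, for example
    boto3.client("glue", region_name=...). The sleep function is
    injectable so the loop can be exercised without real waiting.
    """
    for _ in range(max_polls):
        response = glue_client.get_data_quality_rule_recommendation_run(RunId=run_id)
        if response["Status"] in TERMINAL_STATUSES:
            return response
        sleep(poll_seconds)
    raise TimeoutError(f"Run {run_id} did not finish after {max_polls} polls")
```

A caller would check that the returned Status is SUCCEEDED before moving on to the evaluation step. Any other terminal status means the recommendation run needs attention first.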

Run data quality rules for the S3 table on the S3 table bucket

In this section, we show how to use the AWS CLI to evaluate the data quality ruleset that we just created on the S3 table. Follow these steps:

Replace the S3 table namespace name, S3 table name, Catalog ID, and Data Quality role ARN with your own information in the following JSON file and save it locally:

    {
        "DataSource": {
            "GlueTable": {
                "DatabaseName": "",
                "TableName": "",
                "CatalogId": ":s3tablescatalog/"
            }
        },
        "Role": "<>",
        "NumberOfWorkers": 2,
        "Timeout": 120,
        "AdditionalRunOptions": {
            "CloudWatchMetricsEnabled": true,
            "CompositeRuleEvaluationMethod": "COLUMN"
        },
        "RulesetNames": ["data_quality_s3_table_demo_ruleset"]
    }

Run the following AWS CLI command, replacing the local file name and Region with your information:

    aws glue start-data-quality-ruleset-evaluation-run --cli-input-json file:// --region

Run the following AWS CLI command, replacing the Region and data quality run ID with your information:
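As a complement to the AWS CLI, the outcome of an evaluation run can also be retrieved in Python. The sketch below is an illustration under stated assumptions rather than the post's own command: the function name is hypothetical, and the client is expected to behave like a boto3 Glue client, where get_data_quality_ruleset_evaluation_run returns a Status and a list of ResultIds, and get_data_quality_result returns the detail for one result ID.

```python
def fetch_evaluation_results(glue_client, run_id):
    """Return the data quality results produced by a finished evaluation run.

    Raises RuntimeError if the run has not succeeded, so callers do not
    mistake an in-progress or failed run for an empty result set.
    """
    run = glue_client.get_data_quality_ruleset_evaluation_run(RunId=run_id)
    status = run["Status"]
    if status != "SUCCEEDED":
        raise RuntimeError(f"Evaluation run {run_id} is in status {status}")
    return [
        glue_client.get_data_quality_result(ResultId=result_id)
        for result_id in run.get("ResultIds", [])
    ]
```

Each returned result would typically carry an overall score and the per-rule outcomes, which is the same information that SageMaker Unified Studio displays later in this post.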

View results in SageMaker Unified Studio

Complete the following steps to view results from your data quality evaluation runs in SageMaker Unified Studio:

Log in to the SageMaker Unified Studio portal using your single sign-on (SSO).

Navigate to your project and note the project role ARN.

Navigate to the Lake Formation console with your Lake Formation data lake administrator permissions. Select the Apache Iceberg table that you created on the general purpose S3 bucket and choose Grant from the Actions dropdown menu. Grant the following Lake Formation permissions to your SageMaker Unified Studio project role from step 2:

Describe for Table permissions and Grantable permissions

Next, select your S3 table from the S3 table bucket catalog in Lake Formation and choose Grant from the Actions dropdown menu. Grant the following Lake Formation permissions to your SageMaker Unified Studio project role from step 2:

Describe for Table permissions and Grantable permissions

Follow the steps at Create an Amazon SageMaker Unified Studio data source for AWS Glue in the project catalog to configure your data source for your GlueDatabase and your S3 tables namespace.

Choose a name and optionally enter a description for your data source details.

Choose AWS Glue (Lakehouse) for your Data source type. Leave connection and data lineage as the default values.

Choose Use the AwsDataCatalog for the AWS Glue database of the Apache Iceberg table on the general purpose S3 bucket.

Choose the Database name corresponding to the GlueDatabase. Choose Next.

Under Data quality, select Enable data quality for this data source. Leave the rest of the defaults.

Configure the next data source with a name for your S3 table namespace. Optionally, enter a description for your data source details.

Choose AWS Glue (Lakehouse) for your Data source type. Leave connection and data lineage as the default values.

Choose to enter the catalog name: s3tablescatalog/

Choose the Database name corresponding to the S3 table namespace. Choose Next.

Select Enable data quality for this data source. Leave the rest of the defaults.

Run each dataset.

Navigate to your project's Assets and select the related asset that you created for the Apache Iceberg table on the general purpose S3 bucket. Navigate to the Data Quality tab to view your data quality results. You should be able to see the data quality results for the S3 table asset in the same way.

The data quality results in the following screenshot show each rule evaluated in the selected data quality evaluation run and its outcome. The data quality score is the percentage of rules that passed, and the overview shows how certain rule types fared during the evaluation. For example, Completeness rule types all passed, but ColumnValues rule types passed only three out of nine times.
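The score and per-rule-type overview described above can be reproduced from a list of rule outcomes. The following sketch assumes each outcome is a dictionary with a Description field holding the rule text (so its first word is the rule type) and a Result field that is PASS for a passing rule; both the field layout and the function name are assumptions made for illustration.

```python
from collections import defaultdict


def summarize_rule_results(rule_results):
    """Return (score, {rule_type: (passed, total)}) for a list of rule outcomes.

    The score is the fraction of rules that passed, matching the
    "percentage of rules that passed" description in the text.
    """
    counts = defaultdict(lambda: [0, 0])  # rule type -> [passed, total]
    for result in rule_results:
        rule_type = result["Description"].split()[0]
        counts[rule_type][1] += 1
        if result["Result"] == "PASS":
            counts[rule_type][0] += 1
    total = sum(pair[1] for pair in counts.values())
    passed = sum(pair[0] for pair in counts.values())
    score = passed / total if total else 0.0
    return score, {rule_type: tuple(pair) for rule_type, pair in counts.items()}
```

Given, say, three passing Completeness rules and nine ColumnValues rules of which three pass, the function reports a score of 0.5 along with (3, 3) for Completeness and (3, 9) for ColumnValues, mirroring the kind of breakdown shown in the overview.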

Cleanup

To avoid incurring future charges, clean up the resources you created during this walkthrough:

Navigate to the blog post output bucket and delete its contents.

Unregister the data lake location for your output bucket in Lake Formation.

Revoke the Lake Formation permissions for your SageMaker project role, your data quality role, and your AWS Glue job role.

Delete the input data file and the job scripts from your bucket.

Delete the S3 table.

Delete the CloudFormation stack.

(Optional) Delete your SageMaker Unified Studio domain and the associated CloudFormation stacks it created on your behalf.

Conclusion

In this post, we demonstrated how you can now generate data quality recommendations for your lakehouse architecture using Apache Iceberg tables on general purpose Amazon S3 buckets and Amazon S3 Tables. Then we showed how to integrate and view these data quality results in Amazon SageMaker Unified Studio. Try this out for your own use case and share your feedback and questions in the comments.

About the Authors

Brody Pearman is a Senior Cloud Support Engineer at Amazon Web Services (AWS). He's passionate about helping customers use AWS Glue ETL to transform and create their data lakes on AWS while maintaining high data quality. In his free time, he enjoys watching soccer with his friends and walking his dog.

Shiv Narayanan is a Technical Product Manager for AWS Glue's data management capabilities like data quality, sensitive data detection, and streaming capabilities. Shiv has over 20 years of data management experience in consulting, business development, and product management.

Shriya Vanvari is a Software Developer Engineer in AWS Glue. She is passionate about learning how to build efficient and scalable systems to provide a better experience for customers. Outside of work, she enjoys reading and chasing sunsets.

Narayani Ambashta is an Analytics Specialist Solutions Architect at AWS, focusing on the automotive and manufacturing sector, where she guides strategic customers in developing modern data and AI strategies. With over 15 years of cross-industry experience, she specializes in big data architecture, real-time analytics, and AI/ML technologies, helping organizations implement modern data architectures. Her expertise spans lakehouse architecture, generative AI, and IoT platforms, enabling customers to drive digital transformation initiatives. When not architecting modern solutions, she enjoys staying active through sports and yoga.
