Organizations are increasingly required to derive real-time insights from their data while maintaining the ability to perform analytics. This dual requirement presents a significant challenge: how to effectively bridge the gap between streaming data and analytical workloads without creating complex, hard-to-maintain data pipelines. In this post, we demonstrate how to simplify this process using Amazon Data Firehose (Firehose) to deliver streaming data directly to Apache Iceberg tables in Amazon SageMaker Lakehouse, creating a streamlined pipeline that reduces complexity and maintenance overhead.

Streaming data empowers AI and machine learning (ML) models to learn and adapt in real time, which is crucial for applications that require immediate insights or dynamic responses to changing conditions. This creates new opportunities for business agility and innovation. Key use cases include predicting equipment failures based on sensor data, monitoring supply chain processes in real time, and enabling AI applications to respond dynamically to changing conditions. Real-time streaming data helps customers make quick decisions, fundamentally changing how businesses compete in real-time markets.

Amazon Data Firehose seamlessly acquires, transforms, and delivers data streams to lakehouses, data lakes, data warehouses, and analytics services, with automatic scaling and delivery within seconds. For analytical workloads, a lakehouse architecture has emerged as an effective solution, combining the best elements of data lakes and data warehouses. Apache Iceberg, an open table format, enables this transformation by providing transactional guarantees, schema evolution, and efficient metadata handling that were previously only available in traditional data warehouses. SageMaker Lakehouse unifies your data across Amazon Simple Storage Service (Amazon S3) data lakes, Amazon Redshift data warehouses, and other sources, and gives you the flexibility to access your data in place with Iceberg-compatible tools and engines. By using SageMaker Lakehouse, organizations can harness the power of Iceberg while benefiting from the scalability and flexibility of a cloud-based solution. This integration removes the traditional barriers between data storage and ML processes, so data workers can work directly with Iceberg tables in their preferred tools and notebooks.

In this post, we show you how to create Iceberg tables in Amazon SageMaker Unified Studio and stream data to these tables using Firehose. With this integration, data engineers, analysts, and data scientists can seamlessly collaborate and build end-to-end analytics and ML workflows using SageMaker Unified Studio, removing traditional silos and accelerating the journey from data ingestion to production ML models.

Answer overview

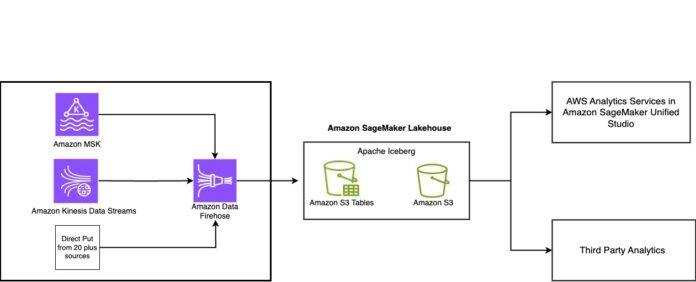

The next diagram illustrates the structure of how Firehose can ship real-time information to SageMaker Lakehouse.

This publish consists of an AWS CloudFormation template to arrange supporting sources so Firehose can ship streaming information to Iceberg tables. You’ll be able to overview and customise it to fit your wants. The template performs the next operations:

Prerequisites

For this walkthrough, you should have the following prerequisites:

After you create the prerequisites, verify that you can log in to SageMaker Unified Studio and that the project is created successfully. Every project created in SageMaker Unified Studio gets a project location and a project IAM role, as highlighted in the following screenshot.

Create an Iceberg table

For this solution, we use Amazon Athena as the engine for our query editor. Complete the following steps to create your Iceberg table:

In SageMaker Unified Studio, on the Build menu, choose Query Editor.

Choose Athena as the engine for the query editor and choose the AWS Glue database created for the project.

Use the following SQL statement to create the Iceberg table. Make sure to provide your project AWS Glue database and project Amazon S3 location (both can be found on the project overview page):

CREATE TABLE firehose_events (
  type struct<device: string, event: string, action: string>,
  customer_id string,
  event_timestamp timestamp,
  region string)
LOCATION '<PROJECT_S3_LOCATION>/iceberg/events'
TBLPROPERTIES (
  'table_type'='iceberg',
  'write_compression'='zstd'
);

Deploy the supporting resources

The next step is to deploy the required resources into your AWS environment by using a CloudFormation template. Complete the following steps:

Choose Launch Stack.

Choose Next.

Leave the stack name as firehose-lakehouse.

Provide the user name and password that you want to use for accessing the Amazon Kinesis Data Generator application.

For DatabaseName, enter the AWS Glue database name.

For ProjectBucketName, enter the project bucket name (located on the SageMaker Unified Studio project details page).

For TableName, enter the table name created in SageMaker Unified Studio.

Choose Next.

Select I acknowledge that AWS CloudFormation might create IAM resources and choose Next.

Complete the stack.
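
If you prefer to script the deployment, the stack parameters above can be collected in the list-of-dictionaries shape that the CloudFormation API expects. The following is a minimal sketch, not part of the original template: `DatabaseName`, `ProjectBucketName`, and `TableName` come from the steps above, whereas the `Username` and `Password` keys and the function name are assumptions, so check the template for the actual parameter names.

```python
def build_stack_parameters(database_name, project_bucket_name, table_name,
                           username, password):
    """Return stack parameters as a list of ParameterKey/ParameterValue dicts."""
    values = {
        "DatabaseName": database_name,
        "ProjectBucketName": project_bucket_name,
        "TableName": table_name,
        # Assumed keys: the post does not name the generator credential parameters.
        "Username": username,
        "Password": password,
    }
    # Fail early if any value was left empty.
    missing = [key for key, value in values.items() if not value]
    if missing:
        raise ValueError("Missing stack parameters: " + ", ".join(missing))
    return [
        {"ParameterKey": key, "ParameterValue": value}
        for key, value in values.items()
    ]
```

A list like this could be passed as `Parameters` to the boto3 CloudFormation `create_stack` call, together with `Capabilities=["CAPABILITY_IAM"]` to match the IAM acknowledgment in the console.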

Create a Firehose stream

Complete the following steps to create a Firehose stream to deliver data to Amazon S3:

On the Firehose console, choose Create Firehose stream.

For Source, choose Direct PUT.

For Destination, choose Apache Iceberg Tables.

This example chooses Direct PUT as the source, but you can apply the same steps for other Firehose sources, such as Amazon Kinesis Data Streams and Amazon Managed Streaming for Apache Kafka (Amazon MSK).

For Firehose stream name, enter firehose-iceberg-events.

Collect the database name and table name from the SageMaker Unified Studio project to use in the next step.

In the Destination settings section, enable Inline parsing for routing information and provide the database name and table name from the previous step.

Make sure you enclose the database and table names in double quotes if you want to deliver data to a single database and table. Amazon Data Firehose can also route records to different tables based on the content of the record. For more information, refer to Route incoming records to different Iceberg tables.

Under Buffer hints, reduce the buffer size to 1 MiB and the buffer interval to 60 seconds. You can fine-tune these settings based on the latency needs of your use case.

In the Backup settings section, enter the S3 bucket created by the CloudFormation template (s3://firehose-demo-iceberg–) and the error output prefix (error/events-1/).

In the Advanced settings section, enable Amazon CloudWatch error logging to troubleshoot any failures, and for Existing IAM roles, choose the role that starts with Firehose-Iceberg-Stack-FirehoseIamRole-*, created by the CloudFormation template.

Choose Create Firehose stream.
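
The console settings above can also be expressed as a request for the Firehose `CreateDeliveryStream` API. The following is a rough sketch under stated assumptions: the function name is hypothetical, the field names follow our reading of the `IcebergDestinationConfiguration` structure and should be checked against the API reference, and the single target table is set through the static destination table list instead of inline parsing. CloudWatch logging is left out because it needs log group and log stream names that this post does not specify.

```python
def build_iceberg_stream_config(stream_name, role_arn, catalog_arn,
                                backup_bucket_arn, database_name, table_name):
    """Build keyword arguments for a Direct PUT stream that writes to one Iceberg table."""
    return {
        "DeliveryStreamName": stream_name,
        "DeliveryStreamType": "DirectPut",
        "IcebergDestinationConfiguration": {
            "RoleARN": role_arn,
            "CatalogConfiguration": {"CatalogARN": catalog_arn},
            # Buffer hints from the walkthrough: 1 MiB or 60 seconds.
            "BufferingHints": {"SizeInMBs": 1, "IntervalInSeconds": 60},
            # Static routing to a single database and table.
            "DestinationTableConfigurationList": [
                {
                    "DestinationDatabaseName": database_name,
                    "DestinationTableName": table_name,
                }
            ],
            # Backup bucket and error prefix from the Backup settings step.
            "S3Configuration": {
                "RoleARN": role_arn,
                "BucketARN": backup_bucket_arn,
                "ErrorOutputPrefix": "error/events-1/",
            },
        },
    }
```

With boto3 installed and credentials configured, the result could be passed to the Firehose client as `create_delivery_stream(**config)`. Lower buffer values reduce delivery latency at the cost of more, smaller commits to the table.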

Generate streaming data

Use the Amazon Kinesis Data Generator to publish data records into your Firehose stream:

On the AWS CloudFormation console, choose Stacks in the navigation pane and open your stack.

Select the nested stack for the generator, and go to the Outputs tab.

Choose the Amazon Kinesis Data Generator URL.

Enter the credentials that you defined when deploying the CloudFormation stack.

Choose the AWS Region where you deployed the CloudFormation stack and choose your Firehose stream.

For the template, replace the default values with the following code:

{
  "type": {
    "device": "{{random.arrayElement(["mobile", "desktop", "tablet"])}}",
    "event": "{{random.arrayElement(["firehose_events_1", "firehose_events_2"])}}",
    "action": "update"
  },
  "customer_id": "{{random.number({ "min": 1, "max": 1500})}}",
  "event_timestamp": "{{date.now("YYYY-MM-DDTHH:mm:ss.SSS")}}",
  "region": "{{random.arrayElement(["pdx", "nyc"])}}"
}

Before sending data, choose Test template to see an example payload.

Choose Send data.

You can monitor the progress of the data stream.
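
If you want to produce test records without the generator UI, the following minimal sketch builds events with the same fields as the generator template. The function names are hypothetical, and the timestamp uses UTC, which is an assumption and may differ from what the generator emits.

```python
import json
import random
from datetime import datetime, timezone


def make_event(rng=random):
    """Return one event with the same fields as the generator template."""
    now = datetime.now(timezone.utc)  # assumption: UTC timestamps
    millis = now.microsecond // 1000
    return {
        "type": {
            "device": rng.choice(["mobile", "desktop", "tablet"]),
            "event": rng.choice(["firehose_events_1", "firehose_events_2"]),
            "action": "update",
        },
        # The template quotes the number, so it is sent as a string.
        "customer_id": str(rng.randint(1, 1500)),
        "event_timestamp": now.strftime("%Y-%m-%dT%H:%M:%S.") + f"{millis:03d}",
        "region": rng.choice(["pdx", "nyc"]),
    }


def to_firehose_record(event):
    """Wrap an event in the Record shape used by the Firehose PutRecord API."""
    return {"Data": json.dumps(event).encode("utf-8")}
```

With boto3 available, each record could be sent with the Firehose client's `put_record(DeliveryStreamName="firehose-iceberg-events", Record=to_firehose_record(make_event()))`.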

Query the table in SageMaker Unified Studio

Now that Firehose is delivering data to SageMaker Lakehouse, you can perform analytics on that data in SageMaker Unified Studio using different AWS analytics services.
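
As one illustration of such analytics, the following sketch builds an Athena query that counts delivered events by Region and device. It is an example of our own and not a query from the original walkthrough: the function name and the identifier check are hypothetical, and the column names assume the table definition used in this post.

```python
def build_event_count_query(database, table="firehose_events"):
    """Return SQL that counts events per region and device."""
    # Illustrative guard: accept only letters, digits, and underscores.
    for name in (database, table):
        if not name.replace("_", "").isalnum():
            raise ValueError(f"Unexpected identifier: {name!r}")
    return (
        'SELECT region, "type".device AS device, count(*) AS events\n'
        f'FROM "{database}"."{table}"\n'
        "GROUP BY 1, 2\n"
        "ORDER BY events DESC"
    )
```

You can paste the resulting statement into the query editor, or submit it programmatically through the boto3 Athena client's `start_query_execution(QueryString=...)` call.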

Clean up

It's generally a good practice to clean up the resources created as part of this post to avoid additional cost. Complete the following steps:

On the AWS CloudFormation console, choose Stacks in the navigation pane.

Select the stack firehose-lakehouse* and on the Actions menu, choose Delete Stack.

In SageMaker Unified Studio, delete the domain created for this post.

Conclusion

Streaming data allows models to make predictions or decisions based on the latest information, which is crucial for time-sensitive applications. By incorporating real-time data, models can make more accurate predictions and decisions. Streaming data can help organizations avoid the costs associated with storing and processing large datasets, because it focuses on the most relevant information. Amazon Data Firehose makes it straightforward to bring real-time streaming data to data lakes in Iceberg format and unify it with other data assets in SageMaker Lakehouse, making streaming data accessible to various analytics and AI services in SageMaker Unified Studio to deliver real-time insights. Try out the solution for your own use case, and share your feedback and questions in the comments.

About the Authors

Kalyan Janaki is a Senior Big Data & Analytics Specialist with Amazon Web Services. He helps customers architect and build highly scalable, performant, and secure cloud-based solutions on AWS.

Phaneendra Vuliyaragoli is a Product Management Lead for Amazon Data Firehose at AWS. In this role, Phaneendra leads the product and go-to-market strategy for Amazon Data Firehose.

Maria Ho is a Product Marketing Manager for Streaming and Messaging services at AWS. She works with services including Amazon Managed Streaming for Apache Kafka (Amazon MSK), Amazon Managed Service for Apache Flink, Amazon Data Firehose, Amazon Kinesis Data Streams, Amazon MQ, Amazon Simple Queue Service (Amazon SQS), and Amazon Simple Notification Service (Amazon SNS).
